Manim Community v0.19.0

Interactive visualization for this lecture available here

In the last lecture we considered approximating functions of the form:

\[ y=f(\mathbf{x}), \quad \text{Input: } \mathbf{x} \in\mathbb{R}^n \longrightarrow \text{ Output: }y \in\mathbb{R} \]

In that setup our function takes in a vector and produces a real number as an output (for example a miles per gallon rating).

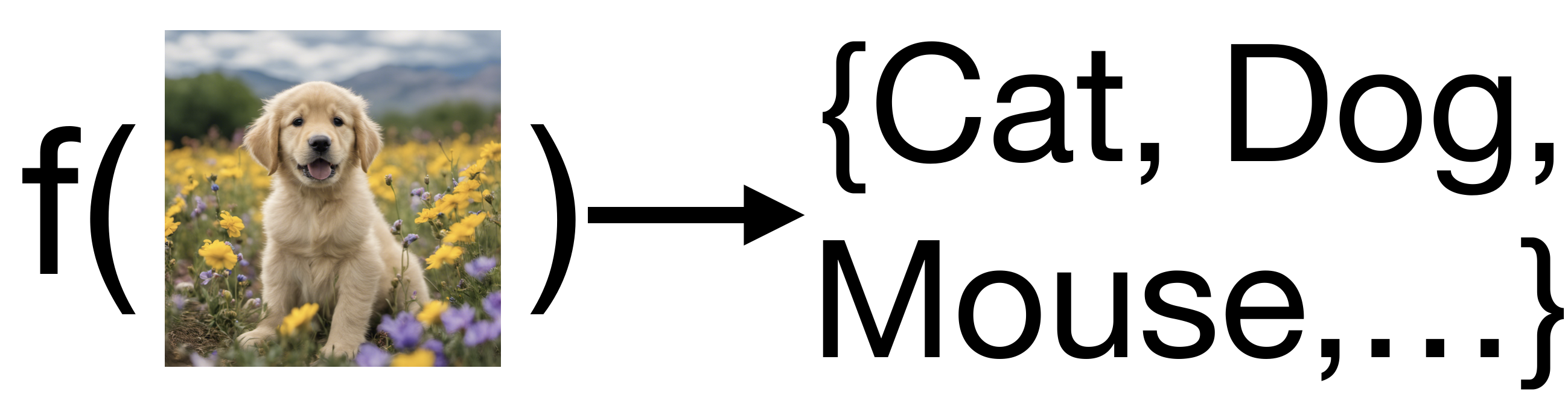

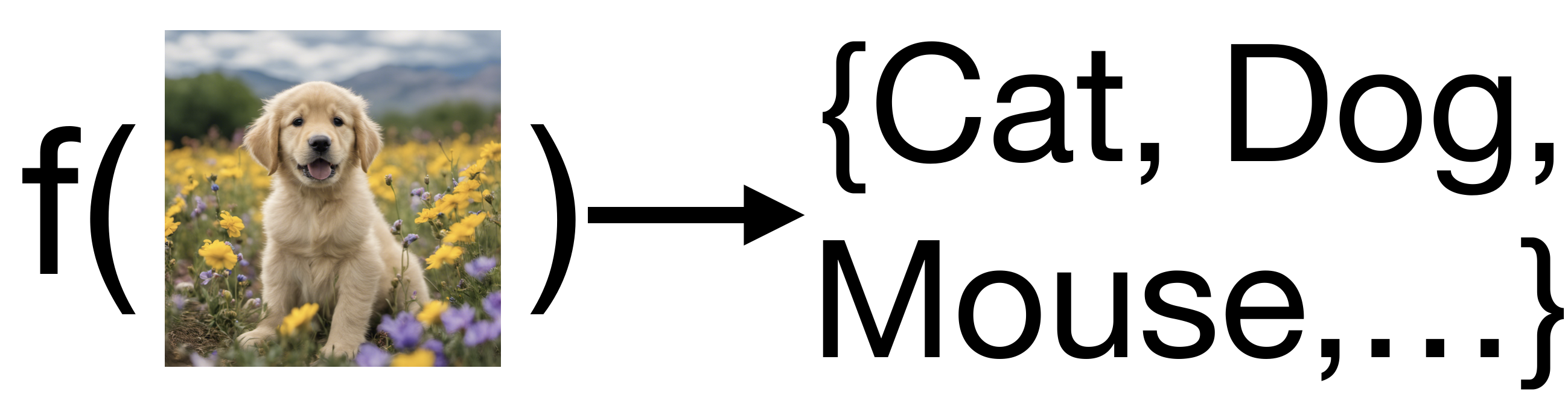

In many real-world problems, the output we want to model is not a continuous value, but a categorical value, meaning the function produces one choice from an unordered of possible outputs. A well-studied example of this kind of prediction is labeling; we might want to assign a label to an image based on the image’s content.

We call the prediction of categorical outputs classification. The output is often also called the class of the observation.

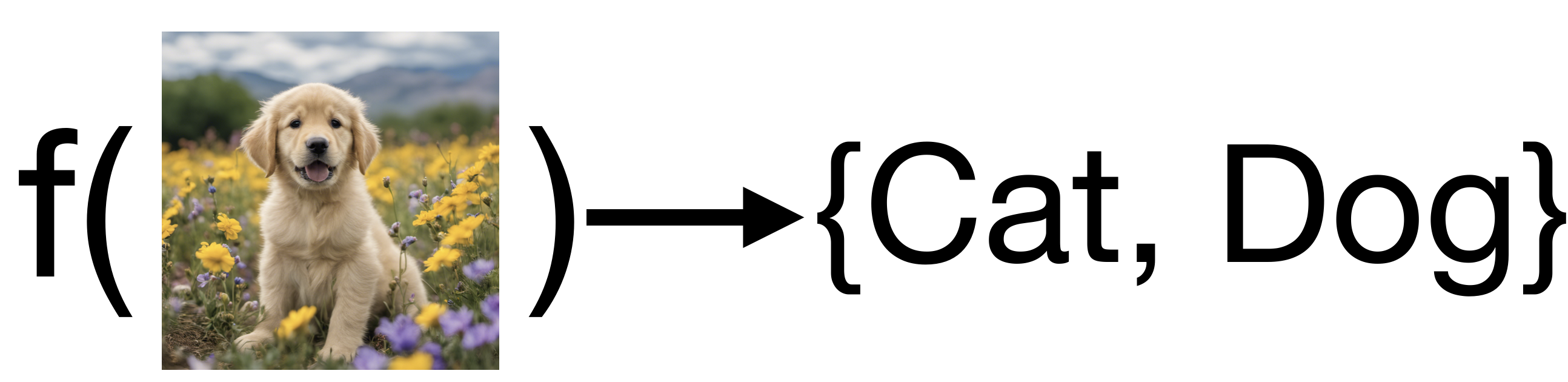

In the simplest binary case our function produces one of two possible outputs.

For example: consider the problem of labeling images as containing either cats or dogs. Conceptually we would like a function that maps images to either a cat label or a dog label:

For convenience and generality, we will typically use the set \(\{0, 1\}\) to denote the possible outputs for a binary classification function. Therefore in general we are considering functions of the form:

\[ y=f(\mathbf{x}), \quad \text{Input: } \mathbf{x} \in\mathbb{R}^n \longrightarrow \text{ Output: }y \in \{0, 1\} \]

We can assign these outputs to correspond to our actual target labels. For instance we might say that \(0 = \textbf{"cat"}\) and \(1=\textbf{"dog"}\).

As a simpler example, let’s again consider the fuel efficiency example from the previous lecture. Perhaps our company has set a target fuel efficiency of 30 miles per gallon for our new model and we want to predict whether our design will meet that target. In this case our inputs will be the same as before, but our output will become a binary label:

\[ \text{Input: } \mathbf{x}_i= \begin{bmatrix} \text{Weight} \\ \text{Horsepower} \\ \text{Displacement} \\ \text{0-60mph} \end{bmatrix}, \quad \text{Output: } y_i = \begin{cases} 1: \text{Meets target } (MPG \geq 30) \\ 0: \text{Fails to meet target } (MPG < 30) \\ \end{cases} \]

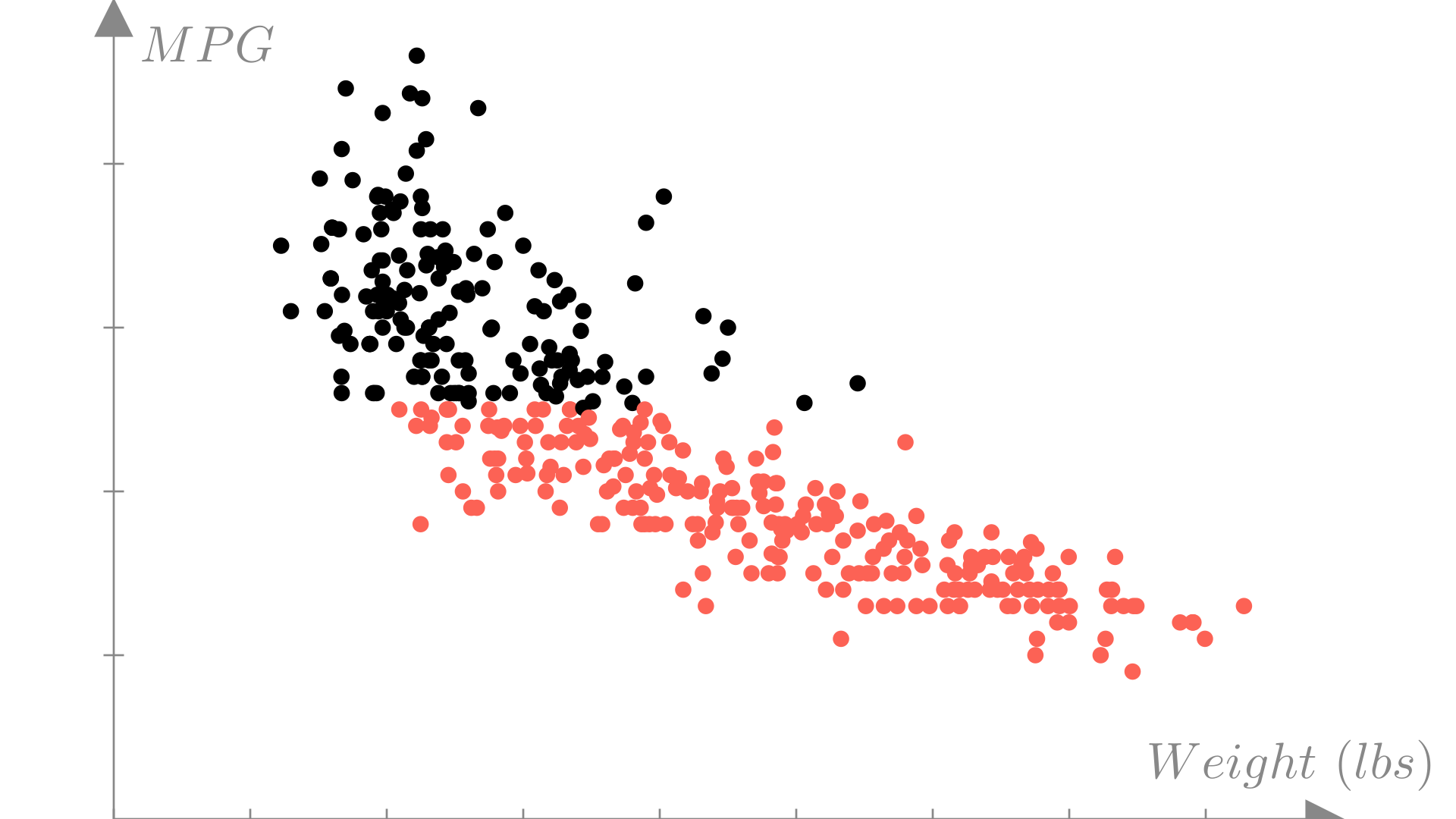

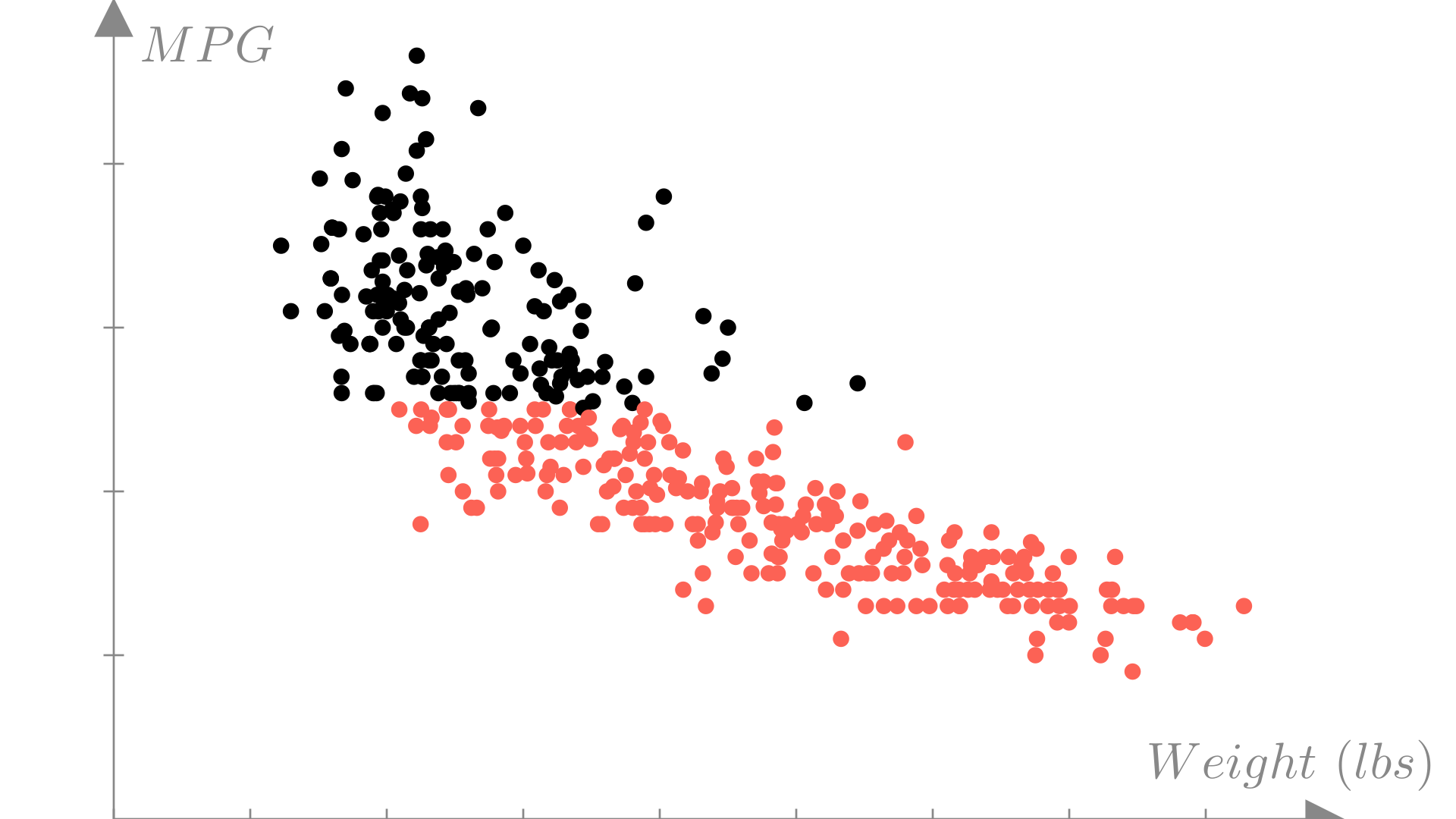

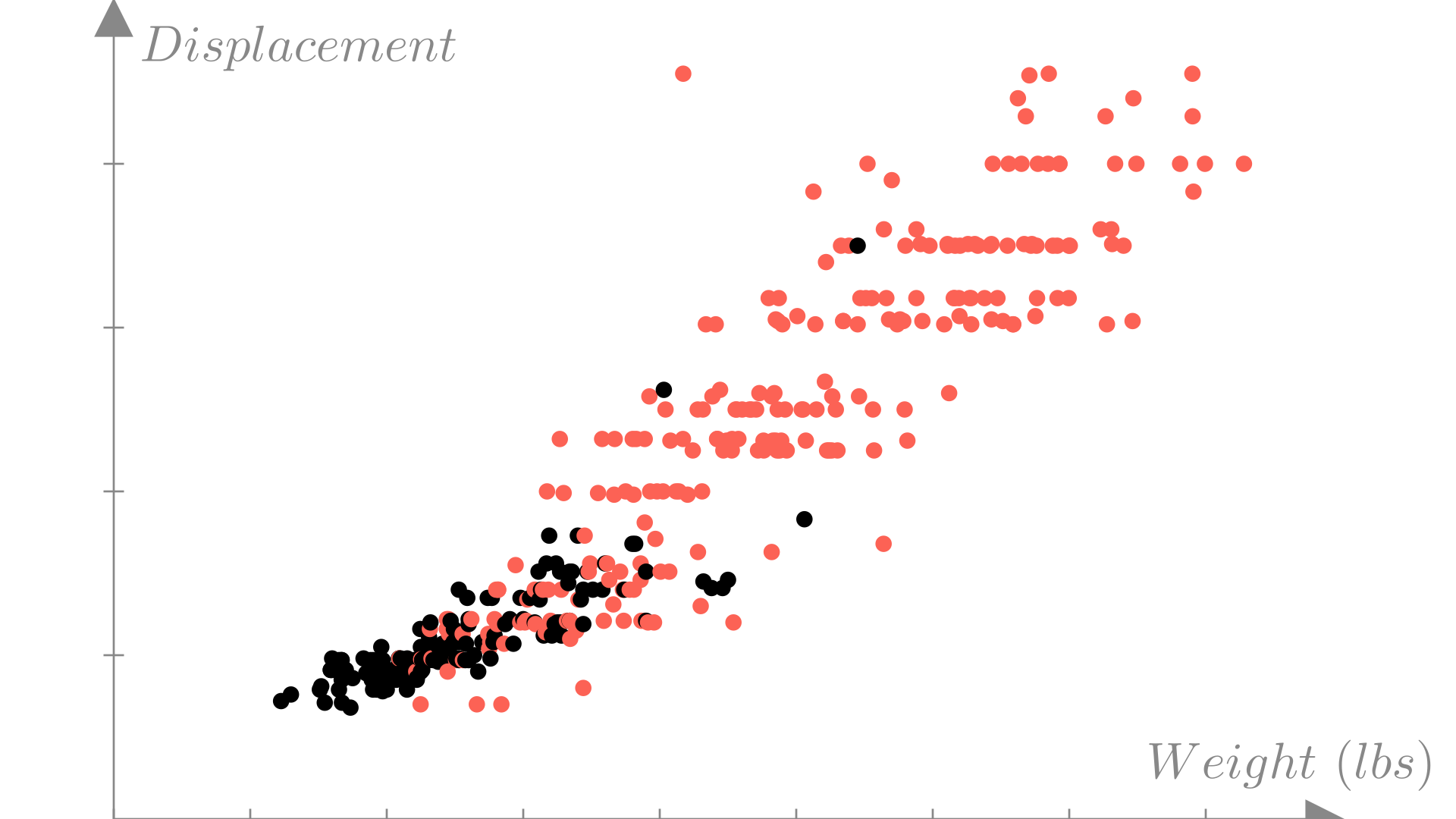

We can visualize which observations meet our target efficiency by again plotting weight against MPG and using colors to distinguish observations would have label \(1\) vs. label \(0\).

Manim Community v0.19.0

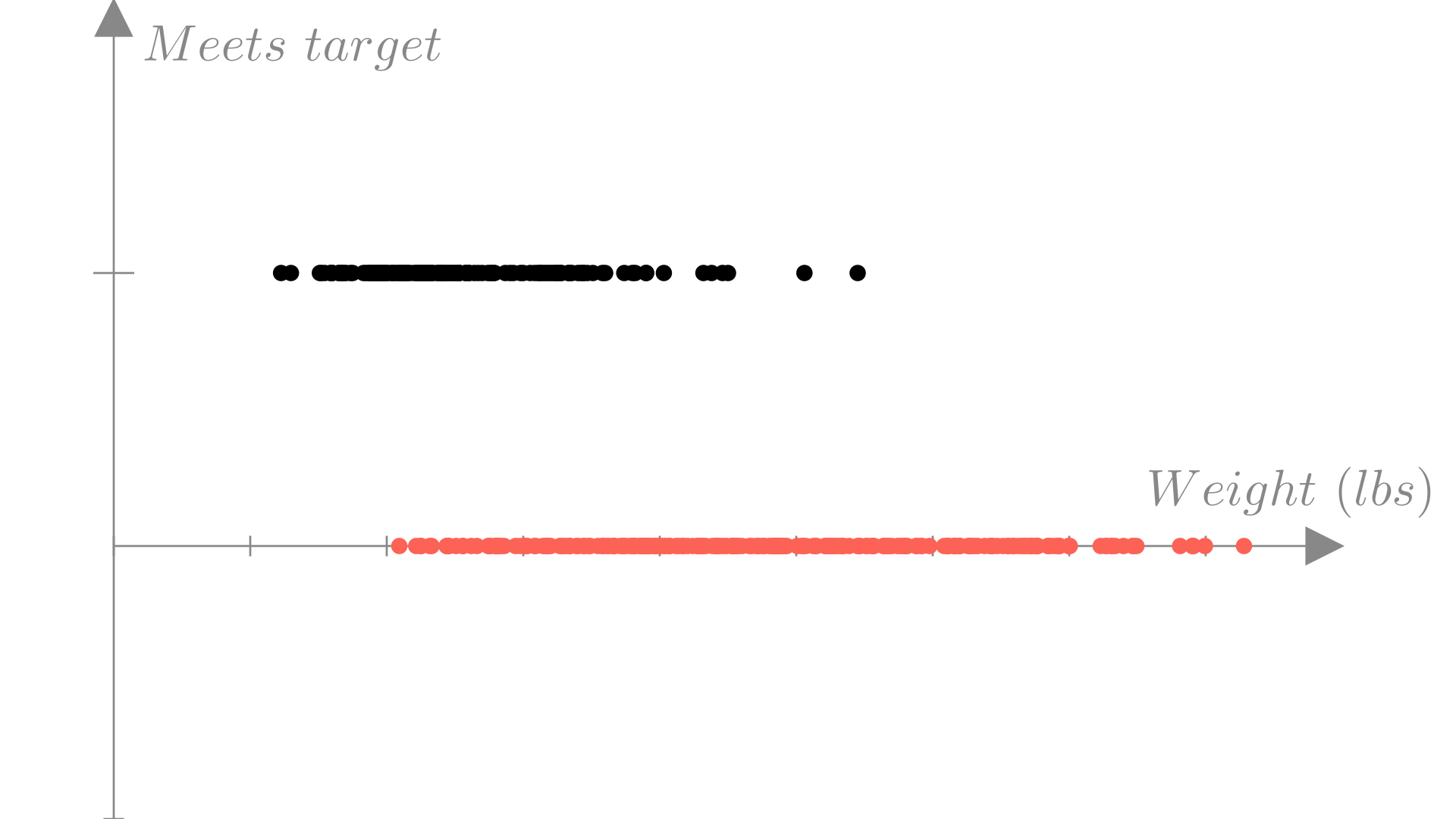

With this new output definition our dataset will look like:

\[ \text{Honda Accord: } \begin{bmatrix} \text{Weight:} & \text{2500 lbs} \\ \text{Horsepower:} & \text{ 123 HP} \\ \text{Displacement:} & \text{ 2.4 L} \\ \text{0-60mph:} & \text{ 7.8 Sec} \end{bmatrix} \longrightarrow \text{1 (Meets target)} \]

\[ \text{Dodge Aspen: } \begin{bmatrix} \text{Weight:} & \text{3800 lbs} \\ \text{Horsepower:} & \text{ 155 HP} \\ \text{Displacement:} & \text{ 3.2 L} \\ \text{0-60mph:} & \text{ 6.8 Sec} \end{bmatrix} \longrightarrow \text{0 (Does not meet target)} \]

\[ \vdots \quad \vdots \]

In this case, we’ve gotten rid of the \(MPG\) output variable and replaced it with a binary output \(y_i \in \{0, 1\}\). If we plot this version of the data, we can see more directly how this classification task differs from the regression task we saw in the last lecture.

Manim Community v0.19.0

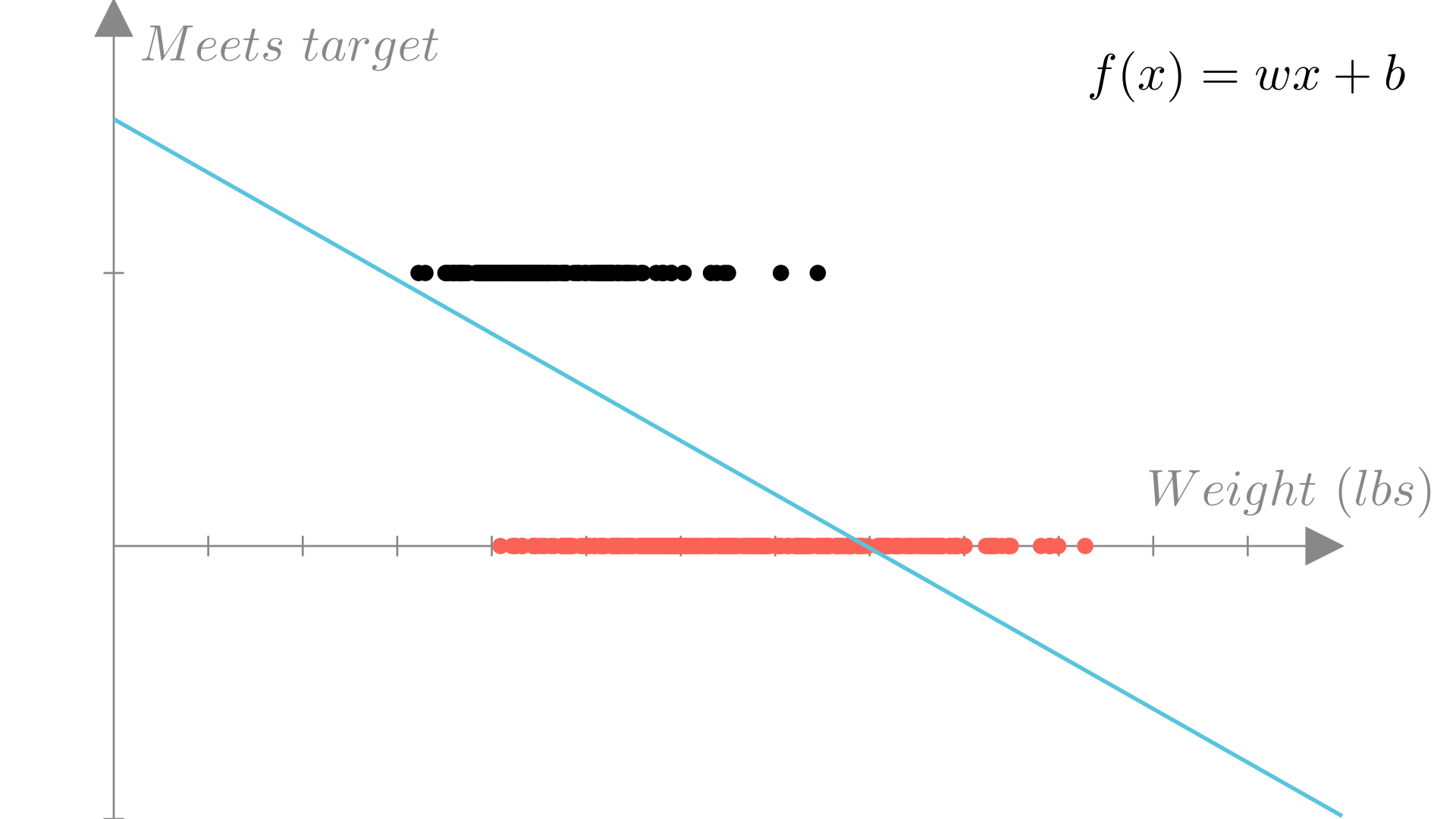

We could fit a linear regression model to our binary data, by simply treating the labels \(0\) and \(1\) as real-valued outputs. For our fuel economy example, such a model would look like this:

Manim Community v0.19.0

However, this doesn’t really address our problem. How do we interpret a prediction of \(-1\) or \(10\) or \(0.5\)?

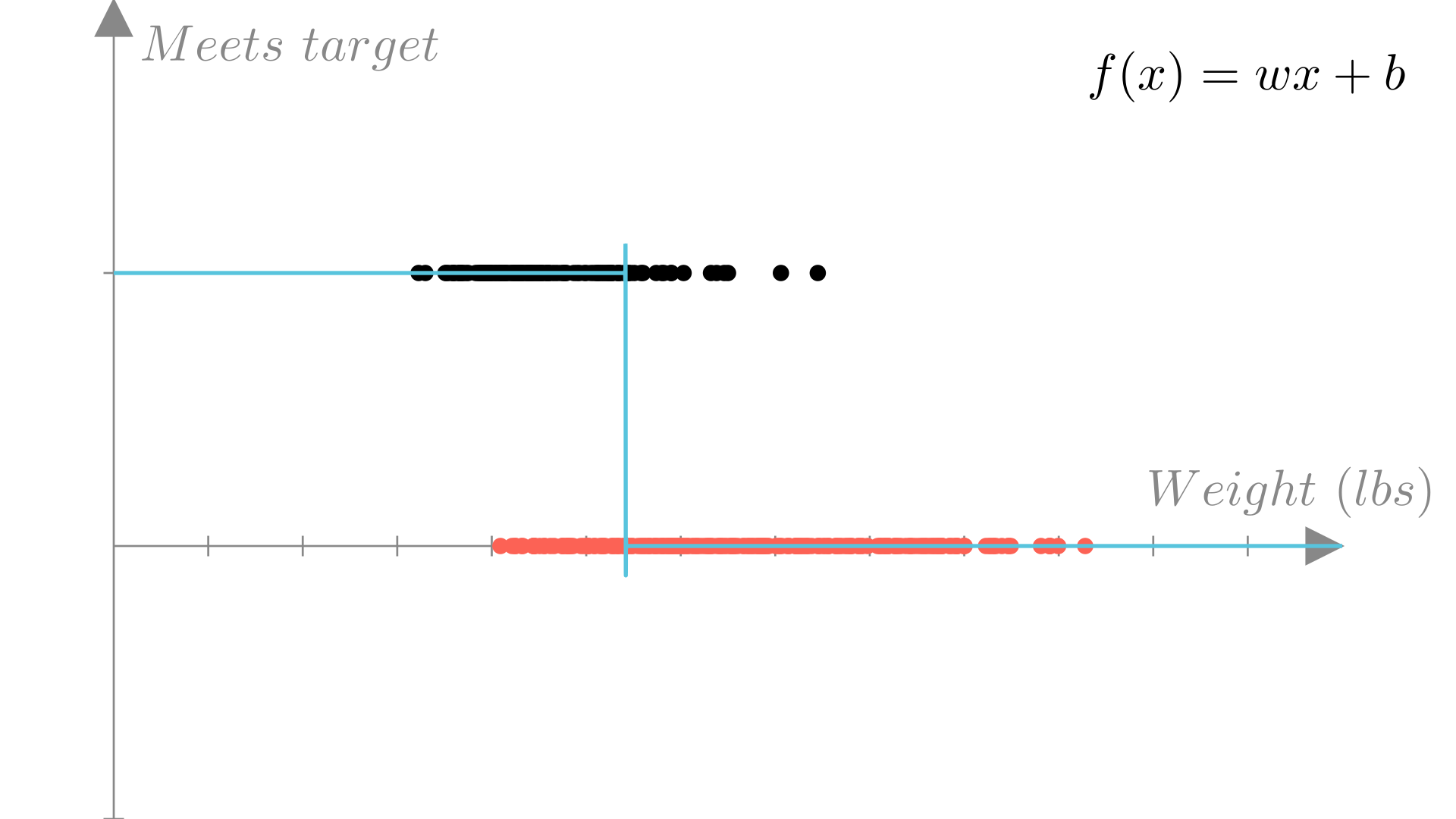

A more suitable prediction function would only output one of our two possible labels \(\{0, 1\}\). Fortunately, we can adapt our linear regression function in this way by defining a cutoff (typically 0), as follows:

\[ f(\mathbf{x})=\mathbf{x}^T\mathbf{w} \quad \longrightarrow \quad f(\mathbf{x})=\begin{cases} 1\ \text{ if }\ \mathbf{x}^T\mathbf{w} \geq 0 \\ 0\ \text{ if }\ \mathbf{x}^T\mathbf{w} < 0\end{cases} \]

We might also write this as:

\[ f(\mathbf{x}) = \mathbb{I}(\mathbf{x}^T\mathbf{w} \geq 0) \]

Where \(\mathbb{I}\) is an indicator function that is \(1\) if the boolean expression is true and \(0\) otherwise.

This gives us a prediction function that looks like step function in 1 dimension:

Manim Community v0.19.0

For our efficiency example, the binary prediction function can be written as:

\[ \text{Meets target} = f(\mathbf{x})= \]

\[ \big((\text{weight})w_1 + (\text{horsepower})w_2 + (\text{displacement})w_3 + (\text{0-60mph})w_4 + b\big) \geq 0 \]

Or in matrix notation:

\[ f(\mathbf{x})= \left( \begin{bmatrix} \text{Weight} \\ \text{Horsepower} \\ \text{Displacement} \\ \text{0-60mph} \\ 1 \end{bmatrix} \cdot \begin{bmatrix} w_1 \\ w_2\\ w_3 \\ w_4\\ b\end{bmatrix} \geq 0\right) \]

In this form we can see that the sign of each weight parameter determines whether the corresponding feature is more predictive of label \(1\) or \(0\) and to what extent. For instance, large positive weights indicate features that are very predictive of \(1\).

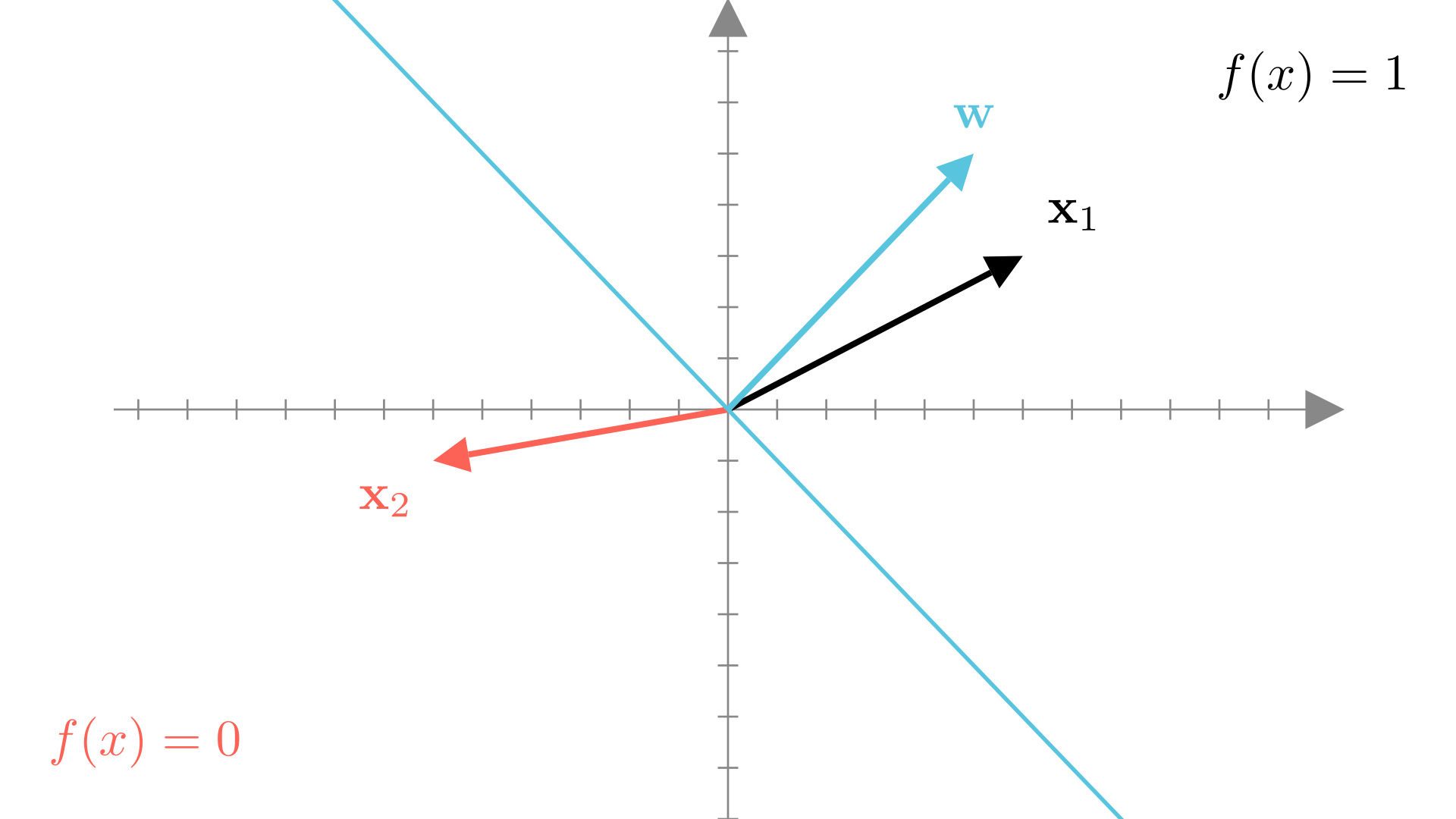

Our binary prediction function also has a geometric interpretation if we think of \(\mathbf{w}\) and \(\mathbf{x}\) as vectors. Reall that the dot product between the vectors \(\mathbf{x}\) and \(\mathbf{w}\) can be written as:

\[ \mathbf{x}^T\mathbf{w} = ||\mathbf{x}||_2 ||\mathbf{w}||_2 \cos \theta \]

Where \(\theta\) is the angle between the two vectors. If the angle between \(\mathbf{w}\) and \(\mathbf{x}\) is in the range \([-\frac{\pi}{2}, \frac{\pi}{2}]\) (or \([-90^o, 90^o]\) in degrees), then the prediction will be \(1\), otherwise it will be 0.

Manim Community v0.19.0

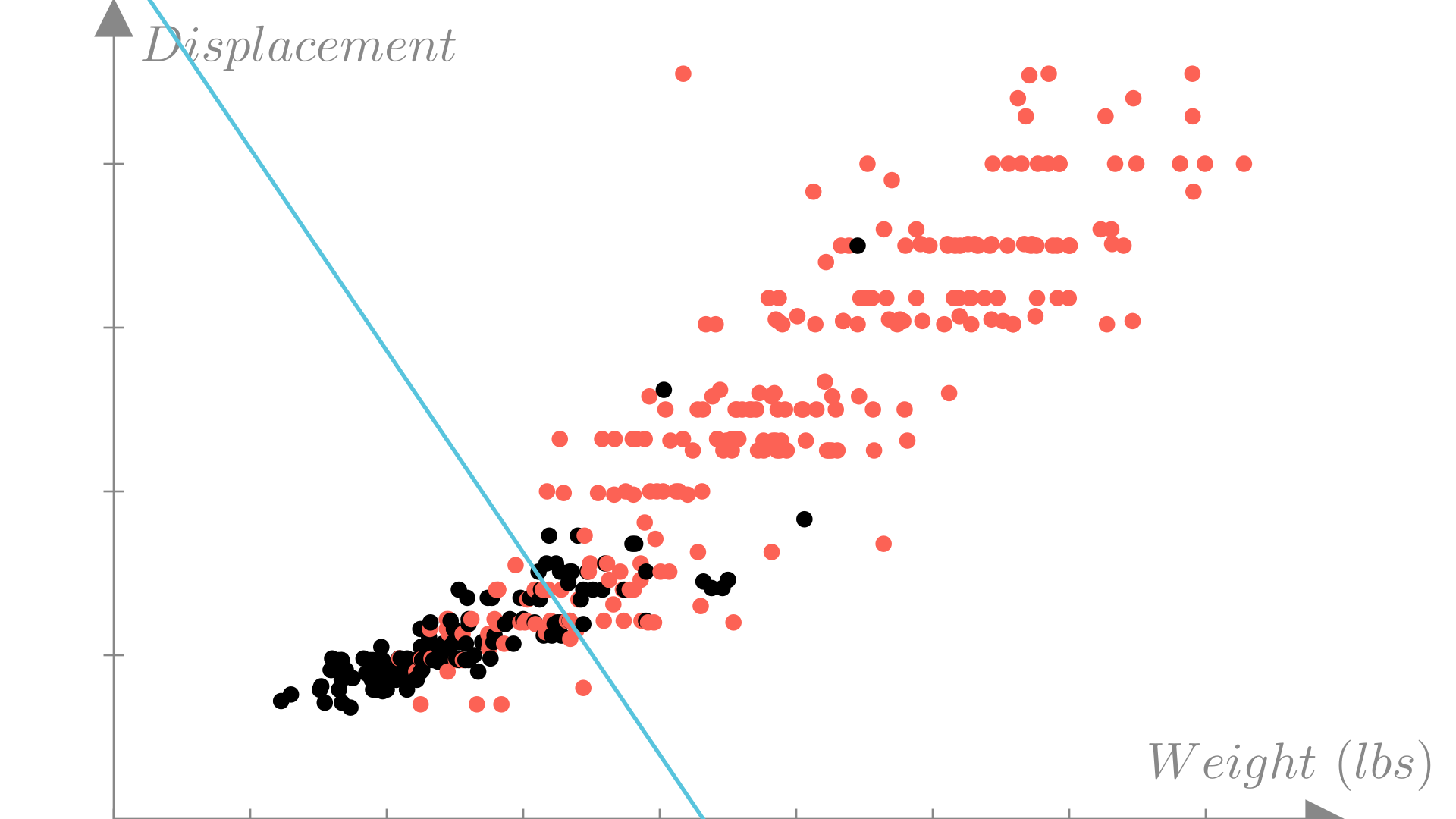

The blue line in the figure above is the set of points such that:

\[ \mathbf{x}^T \mathbf{w} = 0 \]

thus it represents the boundary between the regions where \(1\) and \(0\) predictions are made. By definition, it is perpendicular to the direction of \(\mathbf{w}\).

We can visualize a classification dataset as a function of two variables using color to distinguish between observations with each label. In this example we’ll look at weight and engine displacement.

Manim Community v0.19.0

For a binary classification model the decision boundary is the border between regions of the input space corresponding to each prediction that we saw in the previous section. For a linear classification model the decision boundary is line or plane:

\[\mathbf{x}^T\mathbf{w}=0\]

Here we’ll plot the decision boundary in the input space and color code observations by the predicted label.

Manim Community v0.19.0

A natural measure for error for binary classifiers is accuracy. The accuracy of a prediction function is the fraction of observations where the prediction matches the true output:

\[ \textbf{Accuracy: }\quad \frac{\text{\# of correct predictions}}{\text{Total predictions}} \]

We can write this in terms of our prediction function as:

\[ \textbf{Accuracy} = \frac{1}{N} \sum_{i=1}^N \mathbb{I}\big(f(\mathbf{x}_i) = y_i\big) \]

Below we can plot the decision boundary compared to the true outputs and calculate the accuracy of our predictions.

Accuracy: 0.8291Manim Community v0.19.0

In the last lecture we saw that we can find an optimal choice of parameters \(\mathbf{w}\) for a linear regression model by defining a measure of error or loss for our approximation on our dataset and minimizing that error as a function of \(\mathbf{w}\), either directly or with gradient descent.

\[ \mathbf{w}^* = \underset{\mathbf{w}}{\text{argmin}} \ \mathbf{Loss}(\mathbf{w}) \]

Gradient descent update:

\[ \mathbf{w}^{(k+1)} \quad \longleftarrow \quad \mathbf{w}^{(k)} - \alpha \nabla_{\mathbf{w}} \mathbf{Loss}(\mathbf{w}) \]

We might consider using (negative) accuracy as a loss function or the same mean squared error that we used for linear regression. However, if we tried to minimize one of these losses with gradient descent, we would run into a fundamental problem: the derivative of the indicator function is always \(0\), meaning gradient descent will never update our model.

To get around this problem, we need to turn back to our maximum likelihood estimation approach.

The Bernoulli distribution is a probability distribution over two possible outcomes. It is often thought of as the distribution of a coin flip, where the probability of heads is defined by a parameter \(q\) in the range \([0,1]\).

\[ \text{Probability of }\textbf{heads: } \ \ q, \quad \text{Probability of }\textbf{tails: } 1-q \]

Again we typically use \(0\) and \(1\) to denote the two possible outcomes, so we can write the probability mass function (or likelihood) of the Bernoulli distribution as:

\[ p(y)=\begin{cases} q\quad\ \ \ \ \ \ \ \text{if }\ y=1\\ 1-q\quad \text{if }\ y=0\\ \end{cases}\quad q\in[0,1],\ y\in\{0, 1\} \]

Using the fact that \(y\) can only be \(0\) or \(1\), we can write this more compactly as:

\[ p(y) = q^y(1-q)^{1-y} \]

Recall that the probability mass function tells us the probability of any outcome under our distribution. We can write the log probability mass function as:

\[ \log p(y) = y\log q + (1-y)\log(1-q) \]

In the previous lecture we saw that we could define a probabilistic model for outcomes given inputs by making an strong assumption about how the observed outputs were generated. In particular, we assumed that each \(y_i\) was sampled from a Normal distribution where the mean was a linear function of the input \(\mathbf{x}_i\).

\[ y_i \sim \mathcal{N}(\mathbf{x}_i^T\mathbf{w},\ \sigma^2) \]

Given everything we’ve seen, we might want to do the same for binary outputs by defining a probabilistic model where each binary label $y$_i$ is drawn from a Bernoulli where \(q\) is a linear function of \(\mathbf{x}_i\). Unfortunately \(q\) needs to be restricted to the interval \([0,1]\) and a linear function can make no such guarantee about its output.

\[ \mathbf{x}^T\mathbf{w}\notin [0, 1] \quad \longrightarrow \quad y_i \sim \mathbf{Bernoulli}(\mathbf{ q=? })\quad \]

However, if we had a way to map the outputs of our linear function into the range \([0,1]\), we could define such a model. This means we need a function of the form:

\[ \textbf{Need }\ g(x):\ \mathbb{R} \longrightarrow [0,1] \]

\[ \textbf{Input: } x \in \mathbb{R} \longrightarrow \textbf{Output: } y \in [0,1] \]

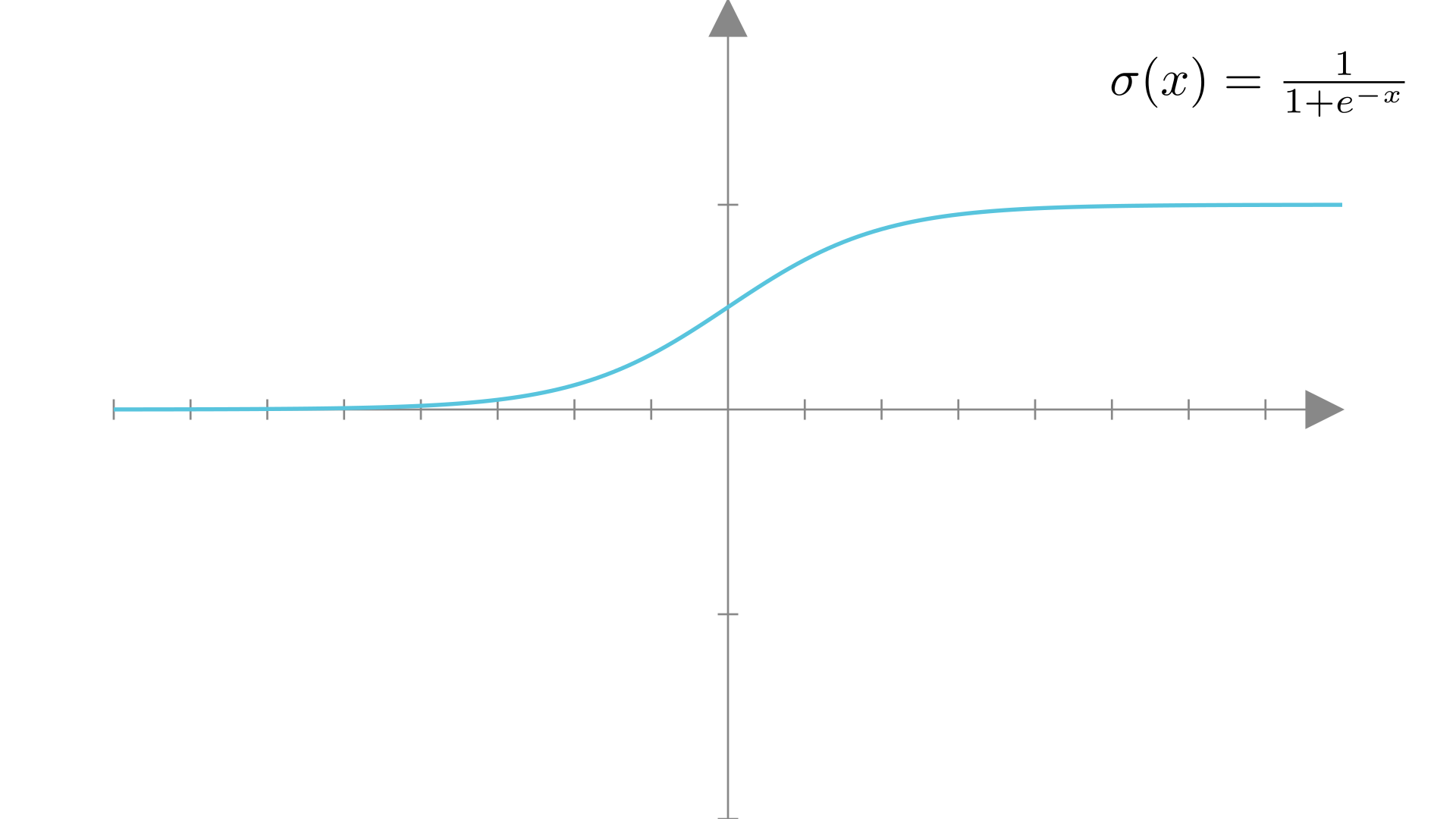

The sigmoid (or logistic) function is exactly such a function.

\[ \sigma(x) = \frac{1}{1+e^{-x}} \]

Manim Community v0.19.0

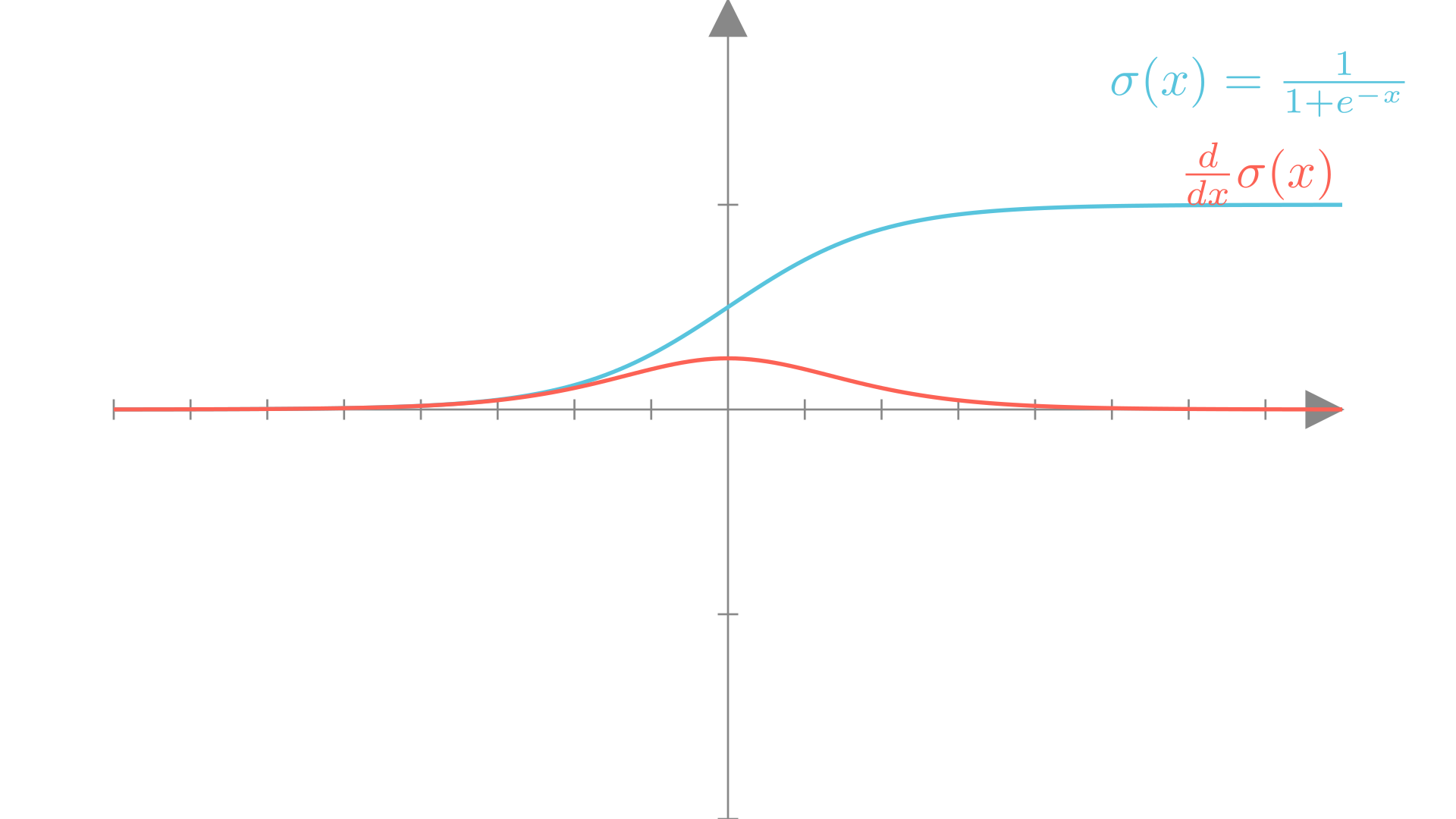

This “S”-shaped function squashes any real number into the range \([0,1]\). The sigmoid function has a number of other nice properties. It is smooth, monotonic and differentiable. It’s derivative has a convenient form that can be written in terms of the sigmoid function itself.

\[ \frac{d}{dx}\sigma(x) = \sigma(x)\big(1-\sigma(x)\big) \]

Manim Community v0.19.0

It’s particularly useful for modeling probabilities because:

\[ \sigma(0) = 0.5 \]

and

\[ 1-\sigma(x) = \sigma(-x) \]

With the sigmoid as our mapping function, we can now define our linear probabilistic model for binary classification as:

\[ y_i \sim \mathbf{Bernoulli}\big(\mathbf{ \sigma(\mathbf{x}_i^T\mathbf{w} })\big) \]

Using this definition, we can easily write out the probability of each output given the input \((\mathbf{x}_i)\) and model parameters \((\mathbf{w})\).

\[ p(y_i = 1\mid \mathbf{x}_i, \mathbf{w}) = \sigma(\mathbf{x}_i^T\mathbf{w}), \quad p(y_i=0\mid \mathbf{x}_i, \mathbf{w})=1-\sigma(\mathbf{x}_i^T\mathbf{w})=\sigma(-\mathbf{x}_i^T\mathbf{w}) \]

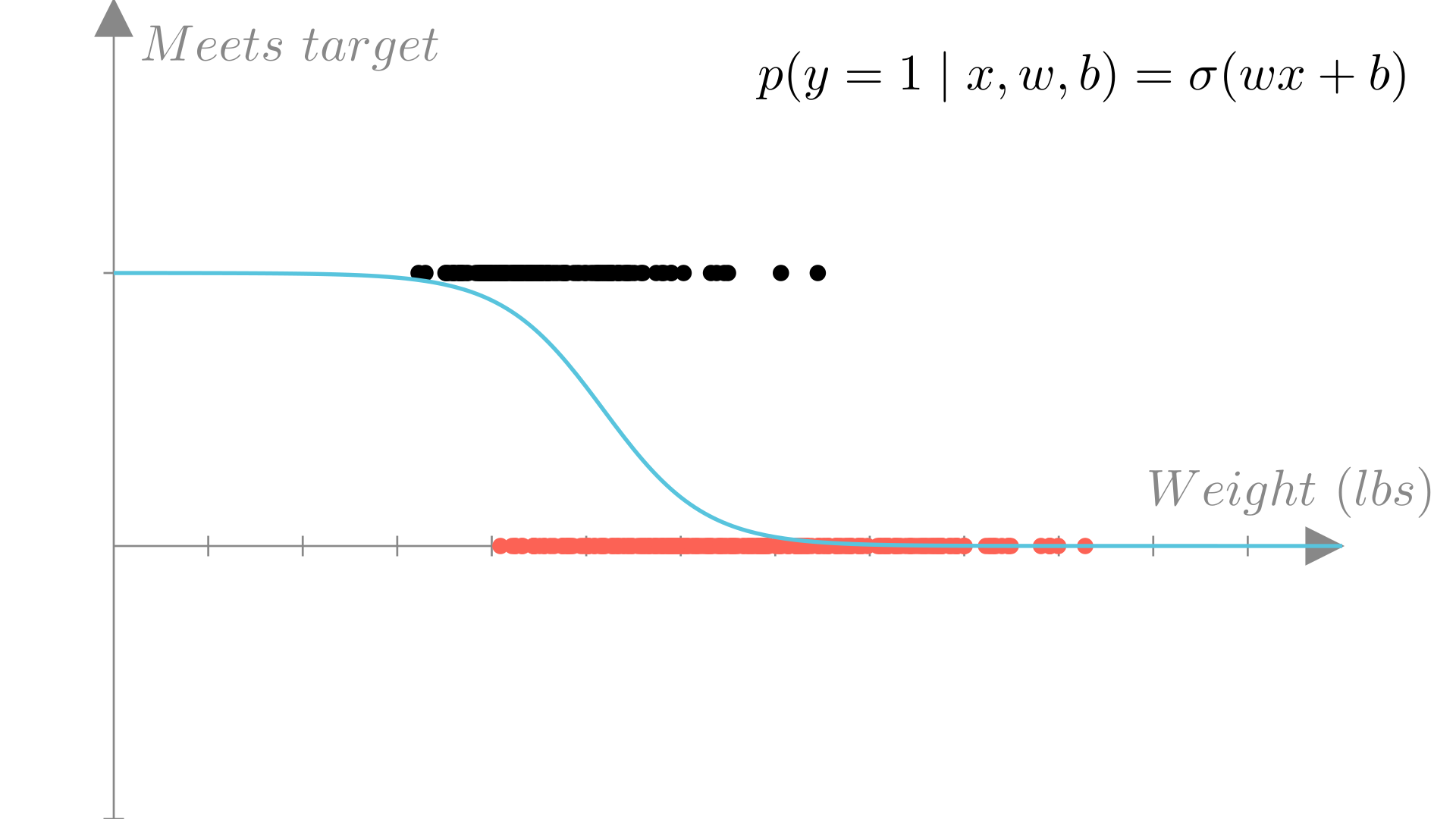

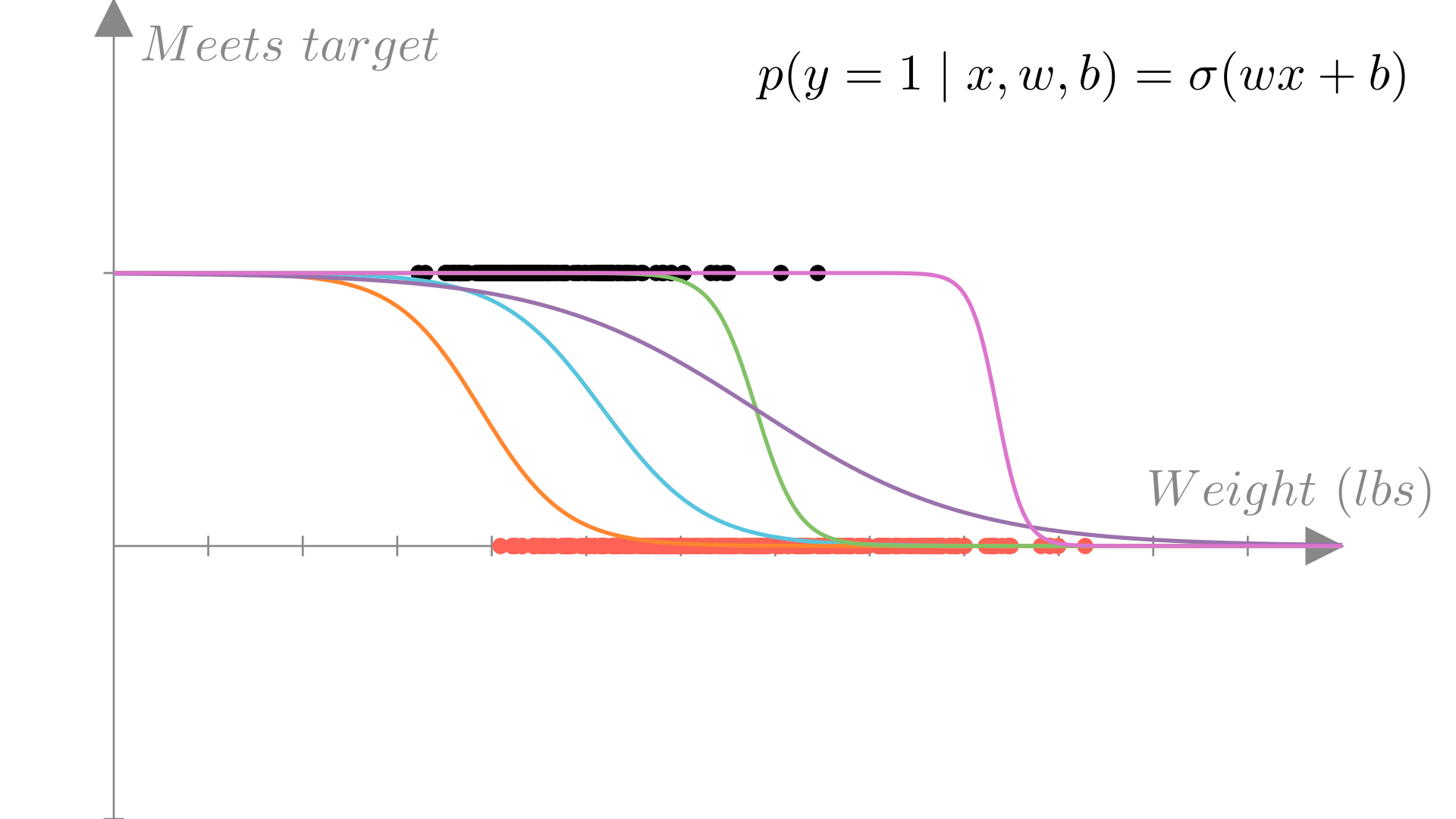

For our fuel efficiency example, we can plot the predicted probability that our target is met, \(p(y=1\mid \mathbf{x}, \mathbf{w})\) under our model as a function of the input (in this case weight). We see that the result is again an s-curve.

Manim Community v0.19.0

We call this probabilistic model for binary outputs: logistic regression.

When we’re making predictions we typically don’t want to sample an output, we want to make a definite prediction. In this case either \(0\) or \(1\). A reasonable way to do this is to simply predict the output that is most likely under our model:

\[ \textbf{Prediction function: } f(\mathbf{x}) = \begin{cases}1 \ \text{if } p(y=1\mid\mathbf{x}, \mathbf{w}) \geq p(y=0\mid\mathbf{x}, \mathbf{w}) \\ 0 \text{ otherwise} \end{cases} \]

Since there’s only two possible outcomes, this is equivalent to checking if the probability of class \(1\) is greater than 50%. \[p(y=1\mid \mathbf{x}, \mathbf{w}) =\sigma(\mathbf{x}^T\mathbf{w})\geq 0.5\]

Since \(\sigma(0) =0.5\), we see that this is equivalent to the decision rule for classification we defined earlier!

\[ p(y_i=1)\geq 0.5 \quad \longrightarrow \quad \mathbf{x}^T\mathbf{w}\geq 0 \]

Now that we’ve setup our model, we can look at how to find the optimal \(\mathbf{w}\) using the principle of maximum likelihood estimation.

Manim Community v0.19.0

Recall that the maximum likelihood estimate of our parameter \(\mathbf{w}\) is the choice of \(\mathbf{w}\) that maximizes the (conditional) probability of the data we observed under our model

\[ \mathbf{w}^* = \underset{\mathbf{w}}{\text{argmax}} \ p(\mathbf{y} \mid \mathbf{X}, \mathbf{w}) =\underset{\mathbf{w}}{\text{argmax}} \ p(y_1,...,y_N \mid \mathbf{x}_1, ...,\mathbf{x}_N, \mathbf{w}) \]

Again, our model also assumes conditional independence across observations so:

\[ p(y_1,...,y_N \mid \mathbf{x}_1, ...,\mathbf{x}_N, \mathbf{w}) = \prod_{i=1}^N p(y_i\mid \mathbf{x}_i, \mathbf{w}) \]

For convenience, it is typical to frame the optimal value in terms of the negative log-likelihood rather than the likelihood, but the two are equivalent.

\[ \underset{\mathbf{w}}{\text{argmax}} \prod_{i=1}^N p(y_i\mid \mathbf{x}_i, \mathbf{w}) = \underset{\mathbf{w}}{\text{argmin}} - \sum_{i=1}^N \log p(y_i \mid \mathbf{x}_i, \mathbf{w}) = \textbf{NLL}(\mathbf{w}, \mathbf{X}, \mathbf{y}) \]

Thus, the negative log-likelihood is a natural loss function to optimize to find \(\mathbf{w}^*\).

\[ \textbf{Loss}(\mathbf{w}) =\textbf{NLL}(\mathbf{w}, \mathbf{X}, \mathbf{y})=- \sum_{i=1}^N \log p(y_i \mid \mathbf{x}_i, \mathbf{w}) \]

We can now write out the negative log-likelihood for our logistic regression model using the Bernoulli PMF we defined above

\[ \mathbf{NLL}(\mathbf{w}, \mathbf{X}, \mathbf{y}) = -\sum_{i=1}^N \bigg[ y_i\log \sigma(\mathbf{x}_i^T\mathbf{w}) + (1-y_i)\log(1-\sigma(\mathbf{x}_i^T\mathbf{w})) \bigg] \]

Using our knowledge of the sigmoid function, we can write this even more compactly:

\[ \mathbf{NLL}(\mathbf{w}, \mathbf{X}, \mathbf{y}) =-\sum_{i=1}^N \bigg[ y_i\log \sigma(\mathbf{x}_i^T\mathbf{w}) + (1-y_i)\log \sigma(-\mathbf{x}_i^T\mathbf{w}) \bigg] \]

\[ = -\sum_{i=1}^N \log\sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) \]

Note that \(2y_i-1\) is \(1\) if \(y_i=1\) and is \(-1\) if \(y_i=0\).

For our logistic regression model, maximum likelihood is intuitive. In the ideal case our model would always predict the correct class with probability 1.

\[ \textbf{Best case scenerio: } p(y_i\mid \mathbf{x}_i, \mathbf{w})=1, \quad \forall i \in \{1,...,N\} \]

This is generally not possible though due to the constraints of our linear function.

We can also write the negative log-likelihood compactly using matrix-vector notation.

\[ \mathbf{NLL}(\mathbf{w}, \mathbf{X}, \mathbf{y}) = -\mathbf{y}^T\log \sigma(\mathbf{X}\mathbf{w}) - (1-\mathbf{y})^T\log \sigma(-\mathbf{X}\mathbf{w}) \]

It’s worth noting that in neural network literature, this loss is often called the binary cross-entropy loss.

As we saw with linear regression, we can find the optimal paramters \(\mathbf{w}^*\) under this loss function using gradient descent:

\[

\mathbf{w}^{(i+1)} \leftarrow \mathbf{w}^{(i)} - \alpha \nabla_{\mathbf{w}} \mathbf{NLL}(\mathbf{w}^{(i)}, \mathbf{X}, \mathbf{y})

\]

To use this, we first need to derive the gradient of the negative log-likelihood with respect to \(\mathbf{w}\). We’ll start by writing out the simplest version of the NLL that we saw above:

\[ \mathbf{NLL}(\mathbf{w}, \mathbf{X}, \mathbf{y}) = -\sum_{i=1}^N \log\sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) \]

\[ \nabla_{\mathbf{w}}\mathbf{NLL}(\mathbf{w}, \mathbf{X}, \mathbf{y}) = \frac{d}{d\mathbf{w}}-\sum_{i=1}^N \log\sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) \]

As a first step, recall that the addition rule tells us that the derivative of a sum is a sum of derivatives:

\[ = -\sum_{i=1}^N \frac{d}{d\mathbf{w}} \log\sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) \]

Next we’ll apply the chain rule to the \(\log\) function, remembering that \(\frac{d}{dx} \log x = \frac{1}{x}\):

\[ = -\sum_{i=1}^N \bigg(\frac{1}{ \sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) }\bigg)\frac{d}{d\mathbf{w}} \sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) \]

Then we can apply the chain rule to the sigmoid function, using the fact that \(\frac{d}{dx} \sigma(x)=\sigma(x)(1-\sigma(x))\):

\[ = -\sum_{i=1}^N \bigg(\frac{1}{ \sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) } \bigg) \bigg(\sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big)\bigg) \bigg(1-\sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) \bigg) \frac{d}{d\mathbf{w}}\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) \]

We now see that the first 2 terms cancel!

\[ = -\sum_{i=1}^N \bigg(1-\sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) \bigg) \frac{d}{d\mathbf{w}}\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) \]

Finally we’re left with the gradient of a linear function, which is just:

\[\frac{d}{d\mathbf{w}}\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big)=(2y_i-1)\mathbf{x}_i\]

Note that the transpose is irrelevant as we’re no longer signifying a dot-product and \(\mathbf{x}_i\) is just a vector. So finally we’re left with

\[ \nabla_{\mathbf{w}}\mathbf{NLL}(\mathbf{w}, \mathbf{X}, \mathbf{y}) = -\sum_{i=1}^N \bigg(1-\sigma\big((2y_i-1)\mathbf{x}_i^T\mathbf{w}\big) \bigg) \bigg((2y_i-1)\mathbf{x}_i \bigg) \]

MathJax = {

const MathJax = await require('mathjax@2.7.5/MathJax.js?config=TeX-MML-AM_CHTML')

.catch(() => window.MathJax)

// configure MathJax

MathJax.Hub.Config({

tex2jax: {inlineMath: [['$','$'], ['\\(','\\)']]},

displayMath: [ ['$$','$$'], ["\\[","\\]"] ],

processEscapes: true

})

return MathJax

}

Plotly = require("https://cdn.plot.ly/plotly-latest.min.js");

tfbase = require('@tensorflow/tfjs@4.11.0')

pyodide = {

const p =

await require("https://cdn.jsdelivr.net/pyodide/v0.22.1/full/pyodide.js");

console.log(p);

return p.loadPyodide();

}

PyScope = function() {

let scope = pyodide.toPy({});

let py = async (strings, ...expressions) => {

let globals = {};

const code = strings.reduce((result, string, index) => {

if (expressions[index]) {

const name = `x${index}`;

globals[name] = expressions[index];

return result + string + name;

}

return result + string;

}, '');

await pyodide.loadPackagesFromImports(code);

scope.update(pyodide.globals);

const result = await pyodide.runPythonAsync(

code,

{

globals: scope

}

);

if (result?.t2Js) return result.t2Js();

if (result?.toJs) return result.toJs();

return result;

};

return py;

}

py = {

let testscope = PyScope();

let py = async (strings, ...expressions) => {

let globals = {};

const code = strings.reduce((result, string, index) => {

if (expressions[index]) {

const name = `x${index}`;

globals[name] = expressions[index];

return result + string + name;

}

return result + string;

}, '');

await pyodide.loadPackagesFromImports(code);

pyodide.globals.update(pyodide.toPy(globals))

const result = await pyodide.runPythonAsync(

code,

{globals: pyodide.globals}

);

if (result?.t2Js) return result.t2Js();

if (result?.toJs) return result.toJs();

return result;

};

const sigmoidGradConfig = {

kernelName: tfbase.Sigmoid,

inputsToSave: ['x'],

gradFunc: (dy, saved) => {

const [x] = saved;

const y = tfbase.sigmoid(x);

return {x: () => tfbase.mul(dy, tfbase.mul(y, tfbase.sub(tfbase.scalar(1), y)))};

}

};

tfbase.registerGradient(sigmoidGradConfig);

const tanhGradConfig = {

kernelName: tfbase.Tanh,

inputsToSave: ['x'],

gradFunc: (dy, saved) => {

const [x] = saved;

const y = tfbase.tanh(x);

return {x: () => tfbase.mul(tfbase.sub(tfbase.scalar(1), tfbase.square(y)), dy)};

}

};

tfbase.registerGradient(tanhGradConfig);

const expGradConfig = {

kernelName: tfbase.Exp,

inputsToSave: ['x'],

gradFunc: (dy, saved) => {

const [x] = saved;

const y = tfbase.exp(x);

return {x: () => tfbase.mul(dy, y)};

}

};

tfbase.registerGradient(expGradConfig);

function dispatchEvent(element){

element.dispatchEvent(new Event("input", {bubbles: true}));

}

pyodide.globals.update(pyodide.toPy({Plotbase: Plot, tfbase: tfbase, Plotlybase: Plotly, dispatchEvent: dispatchEvent, d3base: d3}))

await py`

from pyodide.ffi import create_once_callable

from types import SimpleNamespace

from pyodide.ffi import to_js

from js import Object, document

import pandas

import numpy as np

tfbase = SimpleNamespace(**tfbase)

def convert_tensor(a, *args):

if isinstance(a, Parameter):

a = a.value.value

if isinstance(a, Tensor):

a = a.value

if isinstance(a, np.ndarray):

a = a.tolist()

return to_js(a)

def convert(a):

if isinstance(a, Parameter):

a = a.value.value

if isinstance(a, Tensor):

a = a.value

if isinstance(a, np.ndarray):

a = a.tolist()

return to_js(a, dict_converter=Object.fromEntries, default_converter=convert_tensor)

def convert_start(shape, start):

start = start or 0

if start < 0:

start = shape + start

start = min(start, shape - 1)

return start

def convert_end(shape, start, end):

start = convert_start(shape, start)

if end is None:

end = shape

else:

end = convert_start(shape, end)

return end - start

class Tensor:

keepall = False

class Keep:

def __enter__(self):

self.value = Tensor.keepall

Tensor.keepall = True

def __exit__(self, *args):

Tensor.keepall = self.value

def __init__(self, *args, value=None, keep=None, **kwargs):

if keep is None:

self.keep = Tensor.keepall

else:

self.keep = keep

if not (value is None):

self.value = value

elif len(args) and isinstance(args[0], Tensor):

self.value = tfbase.add(args[0].value, 0)

elif len(args) and args[0] is None:

self.value = tfbase.tensor(0.)

else:

args = [convert(a) for a in args]

kwargs = {k: convert(a) for (k, a) in kwargs.items()}

self.value = tfbase.tensor(*args, **kwargs)

def __getattr__(self, name):

if name == 'T':

return self.transpose()

attr = getattr(self.value, name)

if callable(attr):

def run(*args, **kwargs):

args = [convert(a) for a in args]

kwargs = {k: convert(a) for (k, a) in kwargs.items()}

output = attr(*args, **kwargs)

return Tensor(value=output)

# Prevent premature garbage collection

run._ref = self

return run

return attr

def __add__(a, b):

return Tensor(value=tfbase.add(convert(a), convert(b)))

def __radd__(a, b):

return Tensor(value=tfbase.add(convert(b), convert(a)))

def __sub__(a, b):

return Tensor(value=tfbase.sub(convert(a), convert(b)))

def __rsub__(a, b):

return Tensor(value=tfbase.sub(convert(b), convert(a)))

def __mul__(a, b):

return Tensor(value=tfbase.mul(convert(a), convert(b)))

def __rmul__(a, b):

return Tensor(value=tfbase.mul(convert(b), convert(a)))

def __truediv__(a, b):

return Tensor(value=tfbase.div(convert(a), convert(b)))

def __rtruediv__(a, b):

return Tensor(value=tfbase.div(convert(b), convert(a)))

def __floordiv__(a, b):

return Tensor(value=tfbase.floorDiv(convert(a), convert(b)))

def __rfloordiv__(a, b):

return Tensor(value=tfbase.floorDiv(convert(b), convert(a)))

def __pow__(a, b):

return Tensor(value=tfbase.pow(convert(a), convert(b)))

def __rpow__(a, b):

return Tensor(value=tfbase.pow(convert(b), convert(a)))

def __neg__(a):

return Tensor(value=tfbase.neg(convert(a)))

def __eq__(a, b):

return Tensor(value=tfbase.equal(convert(a), convert(b)))

def __neq__(a, b):

return Tensor(value=tfbase.notEqual(convert(a), convert(b)))

def __lt__(a, b):

return Tensor(value=tfbase.less(convert(a), convert(b)))

def __gt__(a, b):

return Tensor(value=tfbase.greater(convert(a), convert(b)))

def __leq__(a, b):

return Tensor(value=tfbase.lessEqual(convert(a), convert(b)))

def __geq__(a, b):

return Tensor(value=tfbase.greaterEqual(convert(a), convert(b)))

def __del__(self):

if hasattr(self.value, 'dispose') and not self.keep:

self.value.dispose()

def __iter__(self):

for x in self.value.arraySync():

yield Tensor(x)

def __getitem__(self, args):

tosqueeze = []

starts, ends, steps = [], [], []

value = self

if not (type(args) is tuple):

args = (args,)

for ind in range(len(args)):

if args[ind] is Ellipsis:

start = args[:ind]

rest = args[(ind + 1):]

args = start + tuple([slice(None)] * (len(self.value.shape) - (len(start) + len(rest)))) + rest

break

for i, (shape, dim) in enumerate(zip(self.value.shape, args)):

if isinstance(dim, slice):

starts.append(dim.start or 0)

ends.append(dim.stop or shape)

steps.append(dim.step or 1)

elif Tensor(dim).shape:

t = Tensor(dim)

if t.value.dtype == 'bool':

inds = [ind for (ind, e) in enumerate(t.value.arraySync()) if e]

inds = tf.cast(tf.reshape(Tensor(inds), [-1]), 'int32')

value = tf.gather(value, inds, i)

else:

inds = tf.cast(tf.reshape(t, [-1]), 'int32')

value = tf.gather(value, inds, i)

else:

starts.append(dim)

ends.append(dim + 1)

steps.append(1)

tosqueeze.append(i)

value = tf.stridedSlice(value, convert(starts), convert(ends), convert(steps))

if len(tosqueeze) > 0:

value = tf.squeeze(value, tosqueeze)

return value

def t2Js(self):

return to_js(self.value.arraySync())

class wrapper:

def __init__(self, f):

self.f = f

def __call__(self, x, *args, **kwargs):

with Tensor.Keep():

return convert(self.f(Tensor(value=x), *args, **kwargs))

class grad:

def __init__(self, f):

self.f = f

self.wrapper = wrapper(f)

def __call__(self, x, *args, **kwargs):

output = tfbase.grad(create_once_callable(self.wrapper))(x.value, *args, **kwargs)

return Tensor(value=output)

class wrappers:

def __init__(self, f):

self.f = f

def __call__(self, *args):

with Tensor.Keep():

wrapped_args = [Tensor(value=x) for x in args]

return convert(self.f(*wrapped_args))

class grads:

def __init__(self, f):

self.f = f

self.wrapper = wrappers(f)

def __call__(self, *args):

output = tfbase.grads(create_once_callable(self.wrapper))(to_js([arg.value for arg in args]))

return [Tensor(value=x) for x in output]

tf = Tensor(value=tfbase)

Plotbase = SimpleNamespace(**Plotbase)

Plotlybase = SimpleNamespace(**Plotlybase)

d3base = SimpleNamespace(**d3base)

def meshgrid(*args):

return tuple([Tensor(value=a) for a in tfbase.meshgrid(*[convert(arg) for arg in args])])

tf.meshgrid = meshgrid

def default_convert(obj, default_f, other):

if isinstance(obj, Tensor):

obj = obj.t2Js()

if isinstance(obj, pandas.DataFrame):

obj = obj.to_dict('records')

return default_f(obj)

def plotconvert(a):

return to_js(a, dict_converter=Object.fromEntries, default_converter=default_convert)

class PlotWrapper:

def __init__(self, base=None):

self.base = base

def __getattr__(self, name):

attr = getattr(self.base, name)

if callable(attr):

def run(*args, **kwargs):

args = [plotconvert(a) for a in args]

kwargs = {k: plotconvert(a) for (k, a) in kwargs.items()}

return attr(*args, **kwargs)

return run

return attr

Plot = PlotWrapper(Plotbase)

Plotly = PlotWrapper(Plotlybase)

d3 = PlotWrapper(d3base)

def PlotlyFigure(width=800, height=None, hide_toolbar=True, overlay=True):

if height is None:

height = 0.75 * width

width, height = int(width), int(height)

container = document.createElement('div')

container.style.width = str(width) + 'px'

container.style.height = str(height) + 'px'

lineplot = document.createElement('div')

lineplot.classList.add("plotlydiv")

if hide_toolbar:

container.classList.add("hidetoolbar")

container.append(lineplot)

if overlay:

overlay = document.createElement('div')

overlay.classList.add("plotlyoverlay")

container.append(overlay)

container.style.position = 'relative'

overlay.style.top = '0'

overlay.style.bottom = '0'

overlay.style.width = '100%'

overlay.style.position = 'absolute'

return container

def PlotlyInput(width=800, height=None, hide_toolbar=True, sync=None):

container = PlotlyFigure(width, height, hide_toolbar)

lineplot, overlay = container.childNodes[0], container.childNodes[1]

if sync is None:

sync = container

class mover:

def __init__(self):

self.mousedown = False

def __call__(self, event):

if event.type == 'mousedown':

self.mousedown = True

if event.type == 'mouseleave':

self.mousedown = False

if event.type == 'mouseup':

self.mousedown = False

if self.mousedown:

x = float(lineplot._fullLayout.xaxis.p2c(event.layerX - lineplot._fullLayout.margin.l))

y = float(lineplot._fullLayout.yaxis.p2c(event.layerY - lineplot._fullLayout.margin.t))

sync.value = to_js([x, y])

dispatchEvent(sync)

e = mover()

overlay.addEventListener('mousemove', to_js(e))

overlay.addEventListener('mousedown', to_js(e))

overlay.addEventListener('mouseup', to_js(e))

overlay.addEventListener('mouseleave', to_js(e))

container.value = to_js([0., 0.])

return container

def PlotlyReactive(container, traces=[], layout={}, options={}):

full_layout = dict(width=int(container.style.width.replace('px', '')), height=int(container.style.height.replace('px', '')))

full_layout.update(layout)

full_options = {'displayModeBar' : not container.classList.contains('hidetoolbar')}

full_options.update(options)

plot = container.childNodes[0]

Plotly.react(plot, traces, full_layout, full_options)

def colorMap(t, cmap='inferno', cmin=None, cmax=None, scale='linear', res=100):

import matplotlib.cm as cm

if cmin is None:

cmin = tf.min(t)

if cmax is None:

cmax = tf.max(t)

t = (t - cmin) / (cmax - cmin)

if scale == 'log':

e = tf.exp(1)

t = t * (e - 1) + 1

t = tf.log(t)

cmap = Tensor(cm.get_cmap(cmap, res + 1)(range(res + 1)))

t = t * res

shape = t.shape

tflat = tf.reshape(t, [-1])

tfloor = tf.gather(cmap, tf.floor(tflat).cast('int32'))

tceil = tf.gather(cmap, tf.ceil(tflat).cast('int32'))

tfrac = tf.reshape(tflat - tf.floor(tflat), [-1, 1])

tflat = tfrac * tceil + (1. - tfrac) * tfloor

t = tf.reshape(tflat, list(shape) + [4])

return t

def plotTensor(t, canvas, size=None, cmap=None, interpolation='nearest', **kwargs):

if not (cmap is None):

t = colorMap(t, cmap, **kwargs)

if size is None:

size = (canvas.height, canvas.width)

if interpolation == 'bilinear':

t = tfbase.image['resizeBilinear'](t.value, list(size))

else:

t = tfbase.image['resizeNearestNeighbor'](t.value, list(size))

tfbase.browser['toPixels'](t, canvas)

from itertools import chain

import math

class Module:

def __init__(self):

self._submodules = dict()

self.eval = False

self._store = False

def parameters(self):

return chain.from_iterable(map(lambda x: x.parameters(), self._submodules.values()))

def __setattr__(self, name, value):

if isinstance(value, Module):

self._submodules[name] = value

super().__setattr__(name, value)

def __call__(self, *args, **kwargs):

value = self.forward(*args, **kwargs)

self._store = False

return value

def forward(self):

raise NotImplementedError()

def train(self):

self.eval = False

for sm in self._submodules.values():

sm.train()

def eval(self):

self.eval = True

for sm in self._submodules.values():

sm.eval()

def store(self):

self.store = True

for sm in self._submodules.values():

sm.eval()

class Parameter(Module):

def __init__(self, value):

super().__init__()

self.value = value

self.temp = None

self.grad = None

def parameters(self):

return [self]

class Sequential(Module):

def __init__(self, *args):

super().__init__()

self.sequence = []

for arg in args:

if isinstance(arg, Module):

self.sequence.append(arg)

else:

self.sequence.extend(arg)

self._submodules = {k: v for (k,v) in enumerate(self.sequence)}

def __getitem__(self, index):

return self.sequence[index]

def forward(self, X):

for m in self.sequence:

X = m(X)

return X

ModuleList = Sequential

class Sigmoid(Module):

def forward(self, X):

return tf.sigmoid(X)

class ReLU(Module):

def forward(self, X):

return tf.relu(X)

class Tanh(Module):

def forward(self, X):

return tf.tanh(X)

class Linear(Module):

def __init__(self, in_features, out_features):

super().__init__()

# Kaiming He initialization

self.W = Parameter(tf.randomNormal([in_features, out_features]) * math.sqrt((2 / out_features) / 3))

self.b = Parameter(tf.randomNormal([out_features]) * math.sqrt((2 / out_features) / 3))

self.input = None

def forward(self, x):

# Returns a new Matrix

self.input = None

return tf.dot(x, self.W) + self.b

class Optimizer:

def __init__(self, model, loss=None, store=False):

self.parameters = list(model.parameters())

self.model = model

self.loss = loss

self.store = store

def _grads(self, loss, *args, **kwargs):

def loss_internal(*params):

for val, param in zip(params, self.parameters):

param.temp = param.value

param.value = val

try:

l = loss(self.model, *args, **kwargs)

finally:

for param in self.parameters:

param.value = param.temp

param.temp = None

return l

return grads(loss_internal)(*map(lambda p: p.value, self.parameters))

def _step(self, grads):

raise NotImplementedError()

def step(self, *args, **kwargs):

grads = self._grads(self.loss, *args, **kwargs)

if self.store:

for grad, param in zip(grads, self.parameters):

param.grad = grad

return self._step(grads)

def stepWithLoss(self, loss, *args, **kwargs):

grads = self._grads(loss, *args, **kwargs)

return self._step(grads)

class SGD(Optimizer):

def __init__(self, model, loss, lr=0.001, store=False):

super().__init__(model, loss, store)

self.lr = lr

def _step(self, grads):

for grad, param in zip(grads, self.parameters):

param.value = param.value - self.lr * grad

`

return py;

}data = py`

# ${plots}

# Setup the data and prediction functions

import pandas as pd

df = pd.DataFrame(${mpg})[['weight', 'mpg']]

df = df.astype(float).dropna().values

x, y = df[:, :1], df[:, 1:]

x = Tensor((x - x.mean()) / x.std())

y = Tensor((y - y.mean()) / y.std()) > -0

def get_batch(batchsize, x, y):

return x, y

scale = Tensor([[1., 1.]])

def predict(w, x, y=None):

w = w.reshape((-1, 2)) * scale

x = x.reshape((-1, 1))

x = tf.concat([x, tf.onesLike(x)], 1)

if y is None:

return tf.sigmoid(tf.dot(x, w.T))

else:

y = y.reshape((-1, 1))

z = tf.dot(x, w.T)

return y * tf.sigmoid(z) + (1 - y) * tf.sigmoid(-z)

wrange = tf.linspace(-15, 5, 25)

brange = tf.linspace(-10, 10, 25)

ww, bb = tf.meshgrid(wrange, brange)

paramgrid = tf.stack([ww.flatten(), bb.flatten()]).T

eyetheta = 0

(x, y)

`

surfaces = py`

# ${batch} ${plots}

# Plot the loss surface

l1weight = 0.

l2weight = 0.

def loss(w, x, y):

w = w.reshape((-1, 2))

return (tf.mean(-tf.log(predict(w, x, y)), 0)) + l1weight * tf.abs(w).sum(1) + l2weight * (w ** 2).sum(1)

lossgrid = loss(paramgrid, x, y).reshape(ww.shape)

losscontour = plotconvert(dict(x=wrange, y=brange, z=lossgrid, type='contour', ncontours=25, showscale=False, ))

losssurface = plotconvert(dict(x=wrange, y=brange, z=lossgrid, type='surface', showlegend=False, showscale=False, opacity=0.8, contours=dict(x=dict(show=True), y=dict(show=True))))

`

py`

# ${surfaces}

cweights = ${weights}

startpoint = dict(x=[cweights[0]], y=[cweights[1]], mode='markers', showlegend=False, marker=dict(color='firebrick', size=10, line= {'color': 'black', 'width': 3}))

fullweightlist = [Tensor(cweights)]

batchweightlist = [Tensor(cweights)]

steps = int(${steps})

lr = float(${learningrate}) if steps > 0 else 0.

momentum = 0.

nxbatch, nybatch = batches[0]

batchgrad = grad(lambda t: loss(t, nxbatch, nybatch))(batchweightlist[-1])

beta = 0.

velocity = batchgrad

magnitude = batchgrad ** 2

if beta > 0:

batchgrad = batchgrad / tf.sqrt(magnitude + 1e-8)

for i, (nxbatch, nybatch) in zip(range(max(1, steps)), batches):

fullgrad = lr * grad(lambda t: loss(t, x, y))(fullweightlist[-1])

bgrad = grad(lambda t: loss(t, nxbatch, nybatch))(batchweightlist[-1])

velocity = momentum * velocity + (1 - momentum) * bgrad

magnitude = beta * magnitude + (1. - beta) * (bgrad ** 2)

batchgrad = velocity

if beta > 0:

batchgrad = velocity / tf.sqrt(magnitude + 1e-8)

fullweightlist.append((fullweightlist[-1] - fullgrad).flatten())

batchweightlist.append((batchweightlist[-1] - lr * batchgrad).flatten())

fullweights = tf.stack(fullweightlist)

batchweights = tf.stack(batchweightlist)

gradplot = dict(x=fullweights[:, 0], y=fullweights[:, 1], showlegend=False, line=dict(color='black'))

batchgradplot = dict(x=batchweights[:, 0], y=batchweights[:, 1], showlegend=False, line=dict(color='orange'))

zloss = loss(fullweights, x, y)

batchzloss = loss(batchweights, x, y)

threedgradplot = dict(x=fullweights[:, 0], y=fullweights[:, 1], z=zloss, showlegend=False, marker=dict(size=4), line=dict(color='black', width=4), type='scatter3d')

threedbatchgradplot = dict(x=batchweights[:, 0], y=batchweights[:, 1], z=batchzloss, showlegend=False, marker=dict(size=4), line=dict(color='orange', width=4), type='scatter3d')

finalloss = zloss[0].t2Js()

PlotlyReactive(lossplot, [losscontour, startpoint, gradplot, batchgradplot], {'title': 'Loss = %.3f' % finalloss, 'showlegend': False, 'xaxis': {'range': [-15, 5], 'title': 'Slope (w)'}, 'yaxis': {'range': [-10, 10], 'title': {

'text': 'Bias (b)'

}}})

`loss = py`

# ${batch}

# Plot the data scatterplot and prediction function

inweights = ${weights}

cweights = Tensor(inweights)

errors = -tf.log(predict(cweights, xbatch.reshape((-1,)), y).flatten())

losses = (errors)

batchdata = dict(x=xbatch.reshape((-1,)), y=ybatch.reshape((-1,)), mode='markers', marker=dict(color=tf.sqrt(losses), colorscale='RdBu', cmin=0, cmax=1))

xrange = tf.linspace(-2, 3, 50)

pfunction = dict(x=xrange.flatten(), y=predict(cweights, xrange).flatten(), line=dict(color='black'))

PlotlyReactive(scatterfig, [ batchdata, pfunction], {'title': 'p(y=1|x) = σ(%.2f x + %.2f)' % (inweights[0], inweights[1]), 'showlegend': False, 'xaxis': {'range': [-2, 3], 'title': {'text': 'x (weight)'}}, 'yaxis': {'range': [-1, 2], 'title': {'text': 'y (MPG)'} }})

histdata = dict(x=errors, type='histogram', xbins= dict(start=-3, end=3, size=0.15))

losses.mean()

`

batch = py`

# ${data}

batchsize = 0

batches = [get_batch(batchsize, x, y) for i in range(max(1, int(${steps})))]

xbatch, ybatch = batches[0]

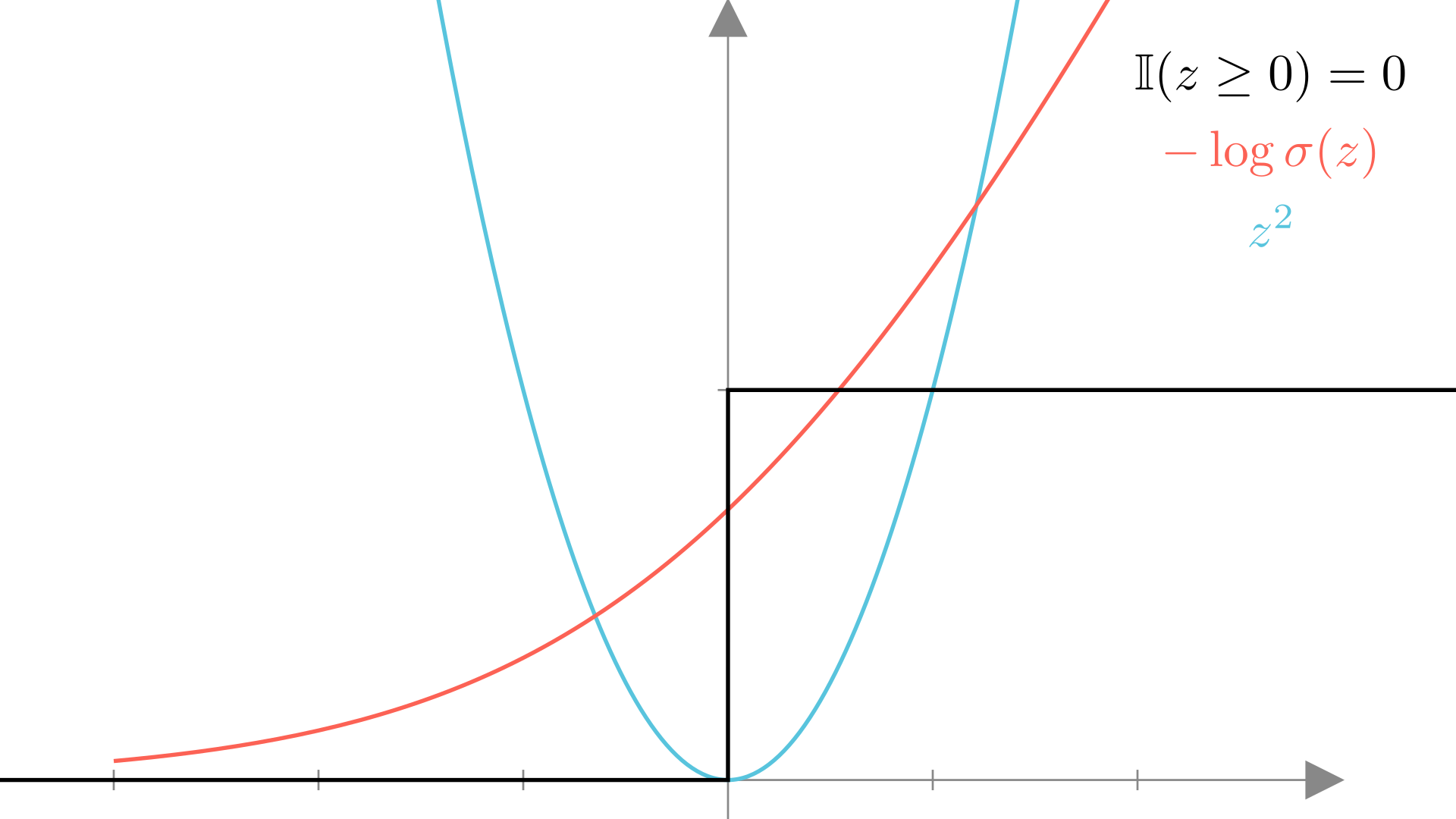

`Let’s look at how this loss function compares to the mean squared error loss we derived for logistic regression. One way to do this is to visualize the loss for a single observation as a function of the output of \(\mathbf{x}^T\mathbf{w}\). Here we’ll look at the loss for different models trying to predict an output of \(y=0\):

\[ \textbf{Let: }\ y=0, \quad z=\mathbf{x}^T\mathbf{w} \]

Manim Community v0.19.0

We see that the squared error loss is best when the output is exactly 0, while the logistic regression NLL wants the output of \(\mathbf{x}^T\mathbf{w}\) to be a negative as possible so that \(p(y=0\mid \mathbf{x}, \mathbf{w}) \longrightarrow 1\). Meanwhile the “accuracy” loss has no slope, making it impossible to optimize with gradient descent.

We’ve now seen a useful model for binary classification, but in many cases we want to predict between many different classes.

We will typically use a set of integers \(\{1, 2,...,C\}\) to denote the possible outputs for a general categorical function. Therefore we are considering functions of the form:

\[ y=f(\mathbf{x}), \quad \text{Input: } \mathbf{x} \in\mathbb{R}^n \longrightarrow \text{ Output: }y \in \{1, 2, ...,C\} \]

It’s important to note that we do not want to assume that the ordering of labels is meaningful. For instance if we’re classifying images of animals we might set the labels such that:

\[ \textbf{1: Cat},\quad \textbf{2: Dog},\quad \textbf{3: Mouse} \]

But this shouldn’t lead to different results to the case where we assign the labels as:

\[ \textbf{1: Dog},\quad \textbf{2: Mouse},\quad \textbf{3: Cat} \]

We call prediction of a categorical output with more than two possibilities multi-class classification.

A symmetric approach to defining a prediction function for multi-class classification is to define a separate linear function for each class and choose the class whose function gives the largest output.

If \(C\) is the number of possible classes, we will therefore have \(C\) different parameter vectors \(\mathbf{w}_1,…,\mathbf{w}_C\) and our prediction function will be defined as:

\[ f(\mathbf{x}) = \underset{c\in\{1...C\}}{\text{argmax}}\ \mathbf{x}^T\mathbf{w}_c \]

For convenience, we can also define a matrix that contains all \(C\) parameter vectors:

\[ \mathbf{W} = \begin{bmatrix} \mathbf{w}_1^T \\ \mathbf{w}_2^T \\ \vdots \\ \mathbf{w}_C^T\end{bmatrix} = \begin{bmatrix} W_{11} & W_{12} & \dots & W_{1d} \\ W_{21} & W_{22} & \dots & W_{2d} \\ \vdots & \vdots & \ddots & \vdots\\ W_{C1} & W_{C2} & \dots & W_{Cd} \end{bmatrix} \]

With this notation, our prediction function becomes:

\[ f(\mathbf{x}) = \underset{c\in\{1...C\}}{\text{argmax}}\ (\mathbf{x}^T\mathbf{W}^T)_c, \quad \mathbf{W} \in \mathbb{R}^{C\times d} \]

If we only have two classes \(0\) and \(1\), so \(C=2\), then this multi-class prediction function reduces to the same as our binary prediction function. We can see this by noting that \(x > y \equiv x-y>0\):

\[ f(\mathbf{x}) = \underset{c\in\{0,1\}}{\text{argmax}}\ (\mathbf{x}^T\mathbf{W}^T)_c = \mathbb{I}(\mathbf{x}^T\mathbf{w}_1 - \mathbf{x}^T\mathbf{w}_0 \geq 0) \]

If we factor out \(\mathbf{x}\) we see that we can simply define a new parameter vector in order to get the same decision rule.

\[ =\mathbb{I}(\mathbf{x}^T(\mathbf{w}_1 - \mathbf{w}_0) \geq 0) \quad \longrightarrow \quad \mathbb{I}(\mathbf{x}^T\mathbf{w} \geq 0), \quad \mathbf{w}=\mathbf{w}_1-\mathbf{w}_0 \]

It follows that the decision boundary between any two classes is also linear! We can see this by plotting a prediction function. In this case for the Iris dataset we saw in the homework.

As a first step towards finding the optimal \(\mathbf{W}\) for a multi-class model, let’s look at a distribution over multiple discrete outcomes: the Categorical distribution.

A categorical distribution needs to define a probability for each possible output. We’ll use \(q_c\) to denote the probability of output \(c\).

\[ p(y=c) = q_c, \quad y\in \{1...C\} \]

We can then denote the vector of all \(C\) probabilities as \(\mathbf{q}\). Note that in order for this to be valid, every probability needs to be in the range \([0,1]\) and the total probability of all outcomes needs to be \(1\), so:

\[ \mathbf{q} \in \mathbb{R}^C\quad q_c \geq 0\ \forall c\in \{1...C\}\quad \sum_{c=1}^C q_c=1 \]

As with the Bernoulli distribution, we can write this in a more compact form. Here we see that the probability of a given outcome is simply the corresponding entry in \(\mathbf{q}\)

\[ p(y)=\prod q_c^{\mathbb{I}(y=c)} = q_y \]

Thus the log-probability is simply:

\[ \log p(y) = \sum_{c=1}^C \mathbb{I}(y=c)\log q_c = \log q_y \]

With the Categorical distribution defined, we can now ask if we can use it to define a linear probabilistic model for multi-class categorical outputs. As with our other models we’ll consider making the distribution parameter a linear function of our input.

\[ y_i\sim \mathbf{Categorical}(\mathbf{q}=?), \quad \mathbf{q}=\mathbf{x}_i^T\mathbf{W}^T? \]

However, we once again run into the issue that the output of our linear function likely won’t satisfy the conditions we need for the parameter of a categorical distribution. In particular, the output is not guaranteed to be positive or to sum to \(1\).

\[ \mathbf{x}^T\mathbf{W}^T\in \mathbb{R}^C,\quad q_c \ngeq 0\ \forall c\in \{1...C\}, \quad \sum_{c=1}^C q_c\neq1 \]

In this case we need a way to map arbitrary vectors to vectors that satisfy these conditions:

\[ \textbf{Need }\ f(\mathbf{x}):\ \mathbb{R}^C \longrightarrow [0,\infty)^C,\ \sum_{i=1}^Cf(\mathbf{x})_c = 1 \]

Such a mapping exists in the softmax function. This function maps vectors to positive vectors such that the entries sum to \(1\). Entry \(c\) of \(\text{softmax}(\mathbf{x})\) can be written as:

\[ \text{softmax}(\mathbf{x})_c = \frac{e^{x_c}}{\sum_{j=1}^Ce^{x_j}} \]

We can also define the softmax function using vector notation as:

\[ \text{softmax}(\mathbf{x}) = \begin{bmatrix}\frac{e^{x_1}}{\sum_{j=1}^Ce^{x_j}} \\ \frac{e^{x_2}}{\sum_{j=1}^Ce^{x_j}} \\ \vdots \\ \frac{e^{x_C}}{\sum_{j=1}^Ce^{x_j}} \end{bmatrix} \]

Intuitively, \(e^x\) is positive for any \(x\), while dividing by the sum ensure the entries sum to 1 as:

\[ \sum_{i=1}^C \frac{e^{x_i}}{\sum_{j=1}^Ce^{x_j}} = \frac{\sum_{i=1}^C e^{x_i}}{\sum_{j=1}^Ce^{x_j}} = 1 \]

The softmax function also has the nice property that

\[ \underset{c\in\{1,...,C\}}{\text{argmax}}\ \mathbf{x}_c = \underset{c\in\{1,...,C\}}{\text{argmax}}\ \text{softmax}(\mathbf{x})_c \]

With the softmax function we can now define our probabilistic model for categorical labels as:

\[ y_i\sim \mathbf{Categorical}\big(\text{softmax}(\mathbf{x}^T\mathbf{W})\big) \]

We see that under this assumption, the probability of a particular output \((c)\) is:

\[ p(y_i=c \mid \mathbf{x}, \mathbf{W}) = \text{softmax}(\mathbf{x}^T\mathbf{W})_c=\frac{e^{\mathbf{x}^T\mathbf{w}_c}}{\sum_{j=1}^Ce^{\mathbf{x}^T\mathbf{w}_j}} \]

We call this particular probabilistic model: multinomial logistic regression

We now have everything we need to define our negative log-likelihood loss for the multi-class classification model. Once again our loss is the negative sum of the log-probability of each observed output:

\[ \textbf{Loss}(\mathbf{W}) =\textbf{NLL}(\mathbf{W}, \mathbf{X}, \mathbf{y})=- \sum_{i=1}^N \log p(y_i \mid \mathbf{x}_i, \mathbf{W}) \]

Using the log-probability of the multinomial logistic regression model we get:

\[ \textbf{NLL}(\mathbf{W}, \mathbf{X}, \mathbf{y})= -\sum_{i=1}^N \log\ \text{softmax}(\mathbf{x}_i^T\mathbf{W}^T)_{y_i} = -\sum_{i=1}^N \log \frac{e^{\mathbf{x}_i^T\mathbf{w}_{y_i}}}{\sum_{j=1}^Ce^{\mathbf{x}_i^T\mathbf{w}_{j}}} \]

We can simplify this further to:

\[ \textbf{NLL}(\mathbf{W}, \mathbf{X}, \mathbf{y})=-\sum_{i=1}^N \bigg(\mathbf{x}_i^T\mathbf{w}_{y_i}- \log\sum_{j=1}^Ce^{\mathbf{x}_i^T\mathbf{w}_{j}}\bigg) \]

In this case our parameters are a matrix \(\mathbf{W}\). The concept of a gradient, extends naturally to a matrix; we simply define the gradient matrix such that each element is the partial derivative with respect to the corresponding element of the input. For the multinomial logistic regression loss, the gradient this looks like:

\[ \nabla_{\mathbf{W}} \mathbf{NLL}(\mathbf{W}, \mathbf{X}, \mathbf{y})= \begin{bmatrix} \frac{\partial \mathbf{NLL}}{\partial W_{11}} & \frac{\partial \mathbf{NLL}}{\partial W_{12}} & \dots & \frac{\partial \mathbf{NLL}}{\partial W_{1C}} \\ \frac{\partial \mathbf{NLL}}{\partial W_{21}} & \frac{\partial \mathbf{NLL}}{\partial W_{22}} & \dots & \frac{\partial \mathbf{NLL}}{\partial W_{2C}} \\ \vdots & \vdots & \ddots & \vdots\\ \frac{\partial \mathbf{NLL}}{\partial W_{d1}} & \frac{\partial \mathbf{NLL}}{\partial W_{d2}} & \dots & \frac{\partial \mathbf{NLL}}{\partial W_{dC}} \end{bmatrix} \]

We can still apply the same gradient descent updates in this case!

\[ \mathbf{W}^{(i+1)} \leftarrow \mathbf{W}^{(i)} - \alpha \nabla_{\mathbf{W}} \mathbf{NLL}(\mathbf{W}^{(i)}, \mathbf{X}, \mathbf{y}) \]